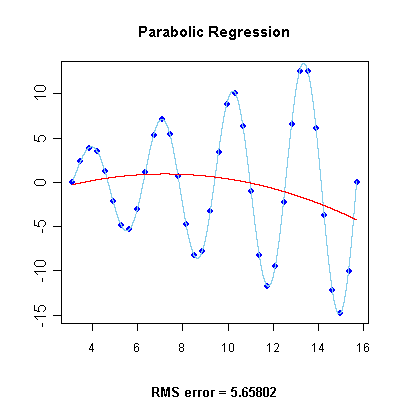

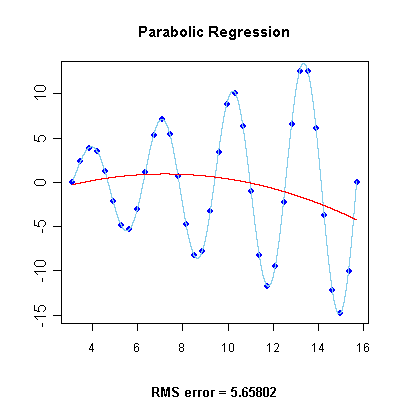

Linear regression is a fundamental machine learning algorithm used to predict a numeric dependent variable based on one or more independent variables. It is a supervised learning algorithm that is both a statistical and a machine learning method: a model that assumes a linear relationship between the input variables (x) and the single output variable (y), where y is the output determined by the input x. Simple regression has one dependent variable (interval or ratio) and one independent variable (interval, ratio, or dichotomous). Linear regression is mostly used for finding the relationship between variables and for forecasting, that is, estimating future outputs from past data. For example, it can be used to quantify the relative impacts of age, gender, and diet (the predictor variables) on height (the outcome variable). We will take an example of teen birth rate and poverty level data. In regression analysis, curve fitting is the process of specifying the model that provides the best fit to the specific curves in your dataset. Curved relationships between variables are not as straightforward to fit and interpret as linear relationships; as a practical demonstration of non-linear regression, the Michaelis-Menten model can be implemented in R. A little bit about the math: the least-squares method is the algorithm that minimizes the residual sum of squares (RSS). Stochastic gradient descent is, in most cases, not used in practice to calculate the coefficients for linear regression.
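To make the least-squares idea concrete, here is a minimal sketch in plain Python of fitting one input x to one output y by minimizing the RSS. The function names `fit_simple_linear_regression` and `rss` and the sample numbers are illustrative assumptions, not part of any library or of the original tutorial.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (slope, intercept) of the line that minimizes the RSS."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x around its mean, and co-variation of x with y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def rss(xs, ys, slope, intercept):
    """Residual sum of squares for the line y = intercept + slope * x."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical data, made up for illustration only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]
slope, intercept = fit_simple_linear_regression(xs, ys)
print(slope, intercept, rss(xs, ys, slope, intercept))
```

Any other choice of slope or intercept gives a larger RSS on the same data, which is what "least squares" means here.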
Linear regression is a good starting example for artificial intelligence. We are given some data points of x and the corresponding y, and we need to learn the relationship between them, which is called a hypothesis. The output varies linearly with the input: y = c + ax, where c is a constant and a is the slope, also written as y = a + b * x. In a salary example, the salary of an employee or person is the dependent variable; another example is measuring a child's height every year of growth. Linear regression quantifies the relationship between one or more predictor variables and one outcome variable. The algorithm performs better when there is a continuous relationship between the inputs and the output, and it also tends to work well on high-dimensional, sparse data sets lacking complexity. Linear regression is a linear system, and the coefficients can be calculated analytically using linear algebra. As an example of OLS, we can perform a linear regression on real-world data that has duration and calories burned for 15000 exercise observations. A log transformation is a relatively common method that allows linear regression to perform curve fitting that would otherwise only be possible in nonlinear regression. For the related logistic regression (logit, MaxEnt) classifier, in the multiclass case the training algorithm uses the one-vs-rest (OvR) scheme if the multi_class option is set to ovr, and uses the cross-entropy loss if the multi_class option is set to multinomial. In this tutorial I explain how to build linear regression in Julia, with full-fledged post model-building diagnostics. Now, let's talk about implementing a linear regression model using PyTorch. The script shown in the steps below is main.py, which resides in the GitHub repository and is forked from the Dive into Deep Learning example repository.
Linear regression is a prediction method that is more than 200 years old. It is a very common statistical method that allows us to learn a function or relationship from a given set of continuous data. Now, let's move towards understanding simple linear regression with the help of an example. In the line y = c + ax, y is the output we want and the dependent variable (DV), c is a constant, and a is the slope of the line; for example, the model can describe how a person's salary changes with the number of years of experience that the employee has. The teen birth rate and poverty dataset (poverty.txt) has size n = 51, covering the 50 states and the District of Columbia in the United States. On the implementation side, coordinate descent considers one column of data at a time, so it automatically converts the X input to a Fortran-contiguous NumPy array if necessary. Related topics include decision tree classifiers and Bayesian linear regression. The SAS/STAT documentation provides detailed reference material for using SAS/STAT software to perform statistical analyses, including analysis of variance, regression, categorical data analysis, multivariate analysis, survival analysis, psychometric analysis, cluster analysis, nonparametric analysis, mixed-models analysis, and survey data analysis, with numerous examples in addition to syntax and usage information. In one fitted model, displacement explains almost 65% of the variability in the response.
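A figure such as "almost 65% of the variability is explained" is the coefficient of determination, R². The sketch below shows one common way to compute it from actual and predicted values; the function name `r_squared` and the small arrays are assumptions made for illustration and are not the mpg data the text refers to.

```python
def r_squared(actual, predicted):
    """Share of the variance in `actual` that the predictions account for."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and equal in length")
    mean_y = sum(actual) / len(actual)
    # Variation left unexplained by the model.
    ss_res = sum((y - p) ** 2 for y, p in zip(actual, predicted))
    # Total variation of the response around its mean.
    ss_tot = sum((y - mean_y) ** 2 for y in actual)
    if ss_tot == 0:
        raise ValueError("actual values must not all be identical")
    return 1.0 - ss_res / ss_tot


# Hypothetical values for illustration only.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 1.5, 3.5, 3.5]
print(r_squared(actual, predicted))
```

An R² of 0.65 therefore says that the residual variation is 35% of the total variation in the response; it does not say that individual predictions are "65% close" to the observed values.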
Linear regression is often described as having five types. Variable selection is an important part of fitting a model, and the dependent variable (Y) should be continuous. When the relationship is curved, the variables can be transformed with log functions so that linear regression still applies. The coefficients used in simple linear regression can be found using stochastic gradient descent.
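As a rough illustration of finding the coefficients with stochastic gradient descent, the sketch below updates the intercept and slope after every single observation. The function name, the learning rate, the number of epochs, and the data are all assumptions chosen for the example; real data usually needs the inputs scaled and the learning rate tuned.

```python
import random


def sgd_simple_linear_regression(xs, ys, learning_rate=0.1, epochs=500, seed=0):
    """Estimate (slope, intercept) for y = intercept + slope * x with SGD."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    intercept, slope = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the observations in a new random order
        for i in indices:
            # Prediction error for one observation.
            error = (intercept + slope * xs[i]) - ys[i]
            # Step both coefficients against the gradient of the squared error.
            intercept -= learning_rate * error
            slope -= learning_rate * error * xs[i]
    return slope, intercept


# Hypothetical noise-free data on the line y = 1 + 2x, with x scaled to [0, 1].
xs = [i / 10 for i in range(11)]
ys = [1.0 + 2.0 * x for x in xs]
print(sgd_simple_linear_regression(xs, ys))
```

For a problem this small the closed-form least-squares solution is simpler and exact; SGD becomes interesting mainly when the data is too large to process in one pass.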

Implementation of linear regression. Variants include multiple linear regression and Bayesian linear regression. A relationship between variables Y and X is represented by the equation Y_i = mX_i + b. It is used to predict the real-valued output y based on the given input value x. If the line equation is considered as y = mx + c, then it is simple linear regression.

Here x is the input variable. If the line equation is considered as y = mx + c, then it is simple linear regression. Simple linear regression is a great first machine learning algorithm to implement: it requires you to estimate properties from your training dataset, but is simple enough for beginners to understand. In this tutorial, you will discover how to implement the simple linear regression algorithm from scratch in Python. Linear regression is still a good choice when you want a simple model for a basic predictive task. Having considered a simple linear regression example, now suppose we take a house price scenario, where the x-axis is the size of the house and the y-axis is the price of the house. In the figure above, we consider some points from a randomly generated dataset. Some curved relationships can be handled as well: for example, the nonlinear function $Y = e^{B_0} X_1^{B_1} X_2^{B_2}$ becomes linear in its coefficients after taking logarithms, $\ln Y = B_0 + B_1 \ln X_1 + B_2 \ln X_2$. For hypothesis testing, a separate blog post explains how t-tests and F-tests are used to test different hypotheses about the linear regression model. In scikit-learn, the LinearRegression class from the sklearn.linear_model module fits a linear regression model. More information about the spark.ml implementation can be found in its section on decision trees. A different technique, neighborhood components analysis, can also learn a low-dimensional linear projection of data that can be used for data visualization and fast classification.
In the mpg example, displacement explains almost 65% of the variability in mpg; loosely, the predicted mpg values track about 65% of the variation in the actual mpg values. Linear regression is a linear model, e.g. a model that assumes a linear relationship between the input variables (x) and the single output variable (y). In statistics, simple linear regression is a linear regression model with a single explanatory variable. In simple linear regression, we have the equation y = m*x + c. For multiple linear regression, we have the equation y = m1*x1 + m2*x2 + m3*x3 + ... + c. Here, we have multiple independent variables, x1, x2, and x3, and multiple slopes, m1, m2, m3, and so on. Linear regression is a linear system, and the coefficients can be calculated analytically using linear algebra.
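One way to calculate the coefficients of a multiple linear regression analytically is to solve the normal equations (XᵀX)β = Xᵀy. The sketch below does this in plain Python with Gaussian elimination; the helper names and the data are assumptions for illustration, and in practice a numerical library would normally be used instead.

```python
def solve_linear_system(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are left untouched.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system; predictors may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        known = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - known) / m[row][row]
    return x


def fit_multiple_linear_regression(rows, ys):
    """Return [c, m1, m2, ...] for y = m1*x1 + m2*x2 + ... + c."""
    # Prepend a column of ones so the intercept c is estimated too.
    design = [[1.0] + list(r) for r in rows]
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(p)]
    return solve_linear_system(xtx, xty)


# Hypothetical data generated from y = 1 + 2*x1 + 3*x2.
rows = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0), (5.0, 5.0)]
ys = [1.0 + 2.0 * x1 + 3.0 * x2 for x1, x2 in rows]
print(fit_multiple_linear_regression(rows, ys))
```

The first returned value is the constant c and the remaining values are the slopes m1, m2, and so on, in the order of the input columns.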
Linear regression is a machine learning algorithm based on supervised learning. It performs a regression task: regression models a target prediction value based on independent variables. Because linear regression has been around for so long (more than 200 years), it has been studied from every possible angle, and often each angle has a new and different name. If the scatter plot of the data shows no relationship, it is recommended not to use a linear regression algorithm. Stepwise regression performs the search over variables automatically. The spark.ml examples load a dataset in LibSVM format, split it into training and test sets, train on the first dataset, and then evaluate on the held-out test set. By contrast, neighborhood components analysis directly maximizes a stochastic variant of the leave-one-out k-nearest neighbors (KNN) score on the training set. A log transformation is a relatively common method that allows linear regression to perform curve fitting that would otherwise only be possible in nonlinear regression.
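To illustrate how a log transformation lets linear regression fit a curve, the sketch below fits a power law y = a * x^b by running an ordinary straight-line fit on (log x, log y). The power-law form, the function name `fit_power_law`, and the data are assumptions chosen for the example; the approach only works when all x and y values are positive.

```python
import math


def fit_power_law(xs, ys):
    """Fit y = a * x**b by least squares on the log-transformed data."""
    if any(x <= 0 for x in xs) or any(y <= 0 for y in ys):
        raise ValueError("log transformation needs strictly positive values")
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(xs)
    mean_lx = sum(log_x) / n
    mean_ly = sum(log_y) / n
    sxx = sum((lx - mean_lx) ** 2 for lx in log_x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((lx - mean_lx) * (ly - mean_ly) for lx, ly in zip(log_x, log_y))
    # In log space the model is a straight line: log y = log a + b * log x.
    b = sxy / sxx
    a = math.exp(mean_ly - b * mean_lx)
    return a, b


# Hypothetical data generated from y = 3 * x**2.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0 * x ** 2 for x in xs]
print(fit_power_law(xs, ys))
```

Note that the fit minimizes squared errors in log space, so it weights relative errors rather than absolute ones; a true nonlinear least-squares fit can give slightly different coefficients on noisy data.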
The figure above shows a simple linear regression. Linear regression is commonly used for predictive analysis and modeling. If the line equation is considered as $y = a x_1 + b x_2 + \dots + n x_n$, then it is multiple linear regression. Various techniques are used to prepare or train the regression equation from data, and the most common one among them is called ordinary least squares. Decision trees, by comparison, are a popular family of classification and regression methods.
We have seen an equation like y = mx + c in maths classes. In this equation, Y is the dependent variable, the variable we are trying to predict or estimate; X is the independent variable, the variable we are using to make predictions; and m is the slope of the regression line, which represents the effect X has on Y. The equation depicts the relationship between the dependent variable y and the independent variables x_i (or features). We suggest you always analyze the data before applying a linear regression algorithm. The method of least squares is a standard approach in regression analysis to approximate the solution of overdetermined systems (sets of equations in which there are more equations than unknowns) by minimizing the sum of the squares of the residuals, a residual being the difference between an observed value and the fitted value provided by a model.
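For the simple line y = mx + c, the least-squares estimates can be written out explicitly. The short derivation below is a sketch under the usual assumptions (n paired observations, not all x values equal), with $\bar{x}$ and $\bar{y}$ denoting the sample means:

```latex
\begin{aligned}
\mathrm{RSS}(m, c) &= \sum_{i=1}^{n} \bigl(y_i - (m x_i + c)\bigr)^2 \\
\frac{\partial\,\mathrm{RSS}}{\partial c} &= -2 \sum_{i=1}^{n} \bigl(y_i - m x_i - c\bigr) = 0
  \;\Rightarrow\; c = \bar{y} - m \bar{x} \\
\frac{\partial\,\mathrm{RSS}}{\partial m} &= -2 \sum_{i=1}^{n} x_i \bigl(y_i - m x_i - c\bigr) = 0
  \;\Rightarrow\; m = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}
\end{aligned}
```

Setting both partial derivatives to zero gives two equations in the two unknowns m and c; substituting the expression for c into the second equation yields the slope formula.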
A fitted linear regression model can be used to identify the relationship between a single predictor variable x_j and the response variable y when all the other predictor variables in the model are "held fixed". In the figure, the line represents the regression line.
The coefficients used in simple linear regression can be found using stochastic gradient descent, although in most cases it is not used to calculate them in practice, since they can be calculated analytically.

Implementation of Linear Regression In this tutorial I explain how to build linear regression in Julia, with full-fledged post model-building diagnostics. Implementing Bayesian Linear Regression. As an example of OLS, we can perform a linear regression on real-world data which has duration and calories burned for 15000 exercise observations. Multiple Linear Regression. Ch. Linear Regression is a very common statistical method that allows us to learn a function or relationship from a given set of continuous data. Examples. In the multiclass case, the training algorithm uses the one-vs-rest (OvR) scheme if the multi_class option is set to ovr, and uses the cross-entropy loss if the multi_class option is set to multinomial. The linear Regression algorithm performs better when there is a continuous relationship between the inputs and output. A relationship between variables Y and X is represented by this equation: Y`i = mX + b. Logistic Regression (aka logit, MaxEnt) classifier. Multiple Linear Regression. It is used to predict the real-valued output y based on the given input value x. considered as y=mx+c, then it is Simple Linear Regression.

Implementation of Linear Regression In this tutorial I explain how to build linear regression in Julia, with full-fledged post model-building diagnostics. Implementing Bayesian Linear Regression. As an example of OLS, we can perform a linear regression on real-world data which has duration and calories burned for 15000 exercise observations. Multiple Linear Regression. Ch. Linear Regression is a very common statistical method that allows us to learn a function or relationship from a given set of continuous data. Examples. In the multiclass case, the training algorithm uses the one-vs-rest (OvR) scheme if the multi_class option is set to ovr, and uses the cross-entropy loss if the multi_class option is set to multinomial. The linear Regression algorithm performs better when there is a continuous relationship between the inputs and output. A relationship between variables Y and X is represented by this equation: Y`i = mX + b. Logistic Regression (aka logit, MaxEnt) classifier. Multiple Linear Regression. It is used to predict the real-valued output y based on the given input value x. considered as y=mx+c, then it is Simple Linear Regression.  x is the input variable. Linear regression hypothesis testing example: This blog post explains concepts in relation to how T-tests and F-tests are used to test different hypotheses in relation to the linear regression model. Linear regression is still a good choice when you want a simple model for a basic predictive task. Allow to bypass several input checking. 2. Linear regression hypothesis testing example: This blog post explains concepts in relation to how T-tests and F-tests are used to test different hypotheses in relation to the linear regression model. We considered a simple linear regression in any machine learning algorithm using example, Now, suppose if we take a scenario of house price where our x-axis is the size of the house and the y-axis is basically the price of the house. Types of Linear Regression. 
What is Linear Regression ? It can also learn a low-dimensional linear projection of data that can be used for data visualization and fast classification. considered as y=mx+c, then it is Simple Linear Regression. Linear regression is still a good choice when you want a simple model for a basic predictive task. In the above illustrating figure, we consider some points from a randomly generated dataset. Linear regression is the mathematical technique to guess the future outputs based on the past data . Allow to bypass several input checking. Simple linear regression is a great first machine learning algorithm to implement as it requires you to estimate properties from your training dataset, but is simple enough for beginners to understand. More information about the spark.ml implementation can be found further in the section on decision trees.. Simple Linear Regression. In this tutorial, you will discover how to implement the simple linear regression algorithm from scratch in Python. For example, the nonlinear function: Y=e B0 X 1 B1 X 2 B2. The LinearRegression() function from sklearn.linear_regression module to fit a linear regression model. Predicted mpg values are almost 65% close (or matching with) to the actual mpg values. Coordinate descent is an algorithm that considers each column of data at a time hence it will automatically convert the X input as a Fortran-contiguous numpy array if necessary. Examples. a model that assumes a linear relationship between the input variables (x) and the single output variable (y). So here, the salary of an employee or person will be your dependent variable. Allow to bypass several input checking. In simple linear regression, we have the equation: y = m*x + c. For multiple linear regression, we have the equation: y = m1x1 + m2x2 + m3x3 +.. + c. Here, we have multiple independent variables, x1, x2 and x3, and multiple slopes, m1, m2, m3 and so on. What is Linear Regression ? Multiple Linear Regression. 
Linear regression is a linear system and the coefficients can be calculated analytically using linear algebra. y is the output which is determined by input x. It can also learn a low-dimensional linear projection of data that can be used for data visualization and fast classification. Examples of Simple Linear Regression . In the above illustrating figure, we consider some points from a randomly generated dataset. Means based on the displacement almost 65% of the model variability is explained. In statistics, simple linear regression is a linear regression model with a single explanatory variable. For example, we are given some data points of x and corresponding y and we need to learn the relationship between them that is called a hypothesis. Example of Non-Linear Regression in R. As a practical demonstration of non-linear regression in R. Let us implement the Michaelis Menten model in R. Implementing Bayesian Linear Regression. c = constant and a is the slope of the line. Linear regression is a linear model, e.g. Linear Regression with Pytorch. The output varies linearly based upon the input. For example, it can be used to quantify the relative impacts of age, gender, and diet (the predictor variables) on height (the outcome variable). The following examples load a dataset in LibSVM format, split it into training and test sets, train on the first dataset, and then evaluate on the held-out test set. Transforming the Variables with Log Functions in Linear Regression. If the graph is scattered and shows no relationship, it is recommended not to use a Linear Regression algorithm. Linear regression is a linear model, e.g. The example can be measuring a childs height every year of growth. The algorithm directly maximizes a stochastic variant of the leave-one-out k-nearest neighbors (KNN) score on the training set. Linear regression is the mathematical technique to guess the future outputs based on the past data . 
If the graph is scattered and shows no relationship, it is recommended not to use a Linear Regression algorithm. A log transformation is a relatively common method that allows linear regression to perform curve fitting that would otherwise only be possible in nonlinear regression. The reason is because linear regression has been around for so long (more than 200 years). A log transformation is a relatively common method that allows linear regression to perform curve fitting that would otherwise only be possible in nonlinear regression. It has been studied from every possible angle and often each angle has a new and different name. Regression models a target prediction value based on independent variables. Given by: y = a + b * x. The stepwise regression will perform the searching process automatically. It performs a regression task. The reason is because linear regression has been around for so long (more than 200 years). Prerequisite: Linear Regression Linear Regression is a machine learning algorithm based on supervised learning. Simple regression has one dependent variable (interval or ratio), one independent variable (interval or ratio or dichotomous). Google Image. It is used to predict the real-valued output y based on the given input value x. check_input bool, default=True. The script shown in the steps below is main.py which resides in the GitHub repository and is forked from the Dive Into Deep learning example repository. Returns self object. The above figure shows a simple linear regression. Below are the 5 types of Linear regression: 1. Simple linear regression is a great first machine learning algorithm to implement as it requires you to estimate properties from your training dataset, but is simple enough for beginners to understand. 
line equation is considered as y = ax 1 +bx 2 +nx n, then it is Multiple Linear Regression.Various techniques are utilized to prepare or train the regression equation from data, and the most common one among them is called Ordinary Least Squares. We considered a simple linear regression in any machine learning algorithm using example, Now, suppose if we take a scenario of house price where our x-axis is the size of the house and the y-axis is basically the price of the house. It performs a regression task. Simple linear regression is a great first machine learning algorithm to implement as it requires you to estimate properties from your training dataset, but is simple enough for beginners to understand. Decision trees are a popular family of classification and regression methods. Linear regression is commonly used for predictive analysis and modeling. Notes. Decision trees are a popular family of classification and regression methods. Multiple Linear Regression. The algorithm directly maximizes a stochastic variant of the leave-one-out k-nearest neighbors (KNN) score on the training set. For example, it can be used to quantify the relative impacts of age, gender, and diet (the predictor variables) on height (the outcome variable). The above figure shows a simple linear regression. considered as y=mx+c, then it is Simple Linear Regression. Where y is the dependent variable (DV): For e.g., how the salary of a person changes depending on the number of years of experience that the employee has. Where y is the dependent variable (DV): For e.g., how the salary of a person changes depending on the number of years of experience that the employee has. Predicted mpg values are almost 65% close (or matching with) to the actual mpg values. It depicts the relationship between the dependent variable y and the independent variables x i ( or features ). In this tutorial I explain how to build linear regression in Julia, with full-fledged post model-building diagnostics. 
The dependent variable (Y) should be continuous. We have seen equation like below in maths classes. The method of least squares is a standard approach in regression analysis to approximate the solution of overdetermined systems (sets of equations in which there are more equations than unknowns) by minimizing the sum of the squares of the residuals (a residual being the difference between an observed value and the fitted value provided by a model) made in the results of each Stepwise Regression Step by Step Example. We have seen equation like below in maths classes. We suggest you always analyze the data before applying a linear regression algorithm. It depicts the relationship between the dependent variable y and the independent variables x i ( or features ). In this equation, Y is the dependent variable or the variable we are trying to predict or estimate; X is the independent variable the variable we are using to make predictions; m is the slope of the regression line it represent the effect X has on Y. In this tutorial, you will discover how to implement the simple linear regression algorithm from scratch in Python. Linear regression also tends to work well on high-dimensional, sparse data sets lacking complexity. line equation is considered as y = ax 1 +bx 2 +nx n, then it is Multiple Linear Regression.Various techniques are utilized to prepare or train the regression equation from data, and the most common one among them is called Ordinary Least Squares. Notes. More information about the spark.ml implementation can be found further in the section on decision trees.. A fitted linear regression model can be used to identify the relationship between a single predictor variable x j and the response variable y when all the other predictor variables in the model are "held fixed". So here, the salary of an employee or person will be your dependent variable. The line represents the regression line. 
Detailed reference material is available for using SAS/STAT software to perform statistical analyses, including analysis of variance, regression, categorical data analysis, multivariate analysis, survival analysis, psychometric analysis, cluster analysis, nonparametric analysis, mixed-models analysis, and survey data analysis, with numerous examples in addition to syntax and usage information.

In statistics, simple linear regression is a linear regression model with a single explanatory variable. Linear Regression is a supervised learning algorithm that belongs to both statistics and machine learning. It is mostly used for finding out the relationship between variables and for forecasting. In the figure above, we consider some points from a randomly generated dataset. The LinearRegression class from the sklearn.linear_model module can be used to fit a linear regression model.

In regression analysis, curve fitting is the process of specifying the model that provides the best fit to the specific curves in your dataset. Curved relationships between variables are not as straightforward to fit and interpret as linear relationships.

In simple linear regression, we have the equation y = m*x + c. For multiple linear regression, we have the equation y = m1*x1 + m2*x2 + m3*x3 + ... + c. Here, we have multiple independent variables, x1, x2 and x3, and multiple slopes, m1, m2, m3 and so on.
The coefficients used in simple linear regression can be found using stochastic gradient descent, although stochastic gradient descent is not used to calculate the coefficients for linear regression in practice (in most cases). Stepwise regression performs the searching process automatically. Logistic Regression (aka logit, MaxEnt) is a classifier.
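To make the stochastic gradient descent remark concrete, here is a minimal sketch that updates the slope and constant of y = m*x + c one training example at a time. The function name `fit_sgd`, the learning rate, the epoch count, and the data are all assumptions chosen for illustration, not values taken from any particular tutorial.

```python
import random


def fit_sgd(xs, ys, learning_rate=0.01, epochs=2000, seed=0):
    """Estimate (m, c) for y = m*x + c with per-example gradient steps."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    m, c = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the examples in a new random order
        for i in order:
            error = (m * xs[i] + c) - ys[i]
            # Gradient of 0.5 * error**2 with respect to m and c.
            m -= learning_rate * error * xs[i]
            c -= learning_rate * error
    return m, c


# Hypothetical noise-free data generated from y = 2*x + 1.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]

m, c = fit_sgd(xs, ys)
print(f"m={m:.4f} c={c:.4f}")
```

On a problem this small the analytic solution is simpler and exact, which is one reason the iterative approach is mostly a teaching device for linear regression.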

What is Linear Regression? Linear regression is the mathematical technique to guess future outputs based on past data, where x is the input variable. Linear regression is still a good choice when you want a simple model for a basic predictive task.

Linear regression hypothesis testing example: this blog post explains how T-tests and F-tests are used to test different hypotheses about the linear regression model.

Neighborhood Components Analysis can also learn a low-dimensional linear projection of data that can be used for data visualization and fast classification. The check_input option allows several input checks to be bypassed.

Not every relationship is linear. For example, the nonlinear function: Y = e^B0 * X1^B1 * X2^B2.
Linear regression is a linear model, e.g. a model that assumes a linear relationship between the input variables (x) and the single output variable (y). y is the output, which is determined by the input x, and the output varies linearly with the input. In y = c + a*x, c is the constant and a is the slope of the line. For example, we are given some data points of x and corresponding y, and we need to learn the relationship between them, which is called a hypothesis.

Linear regression is a linear system and the coefficients can be calculated analytically using linear algebra. Coordinate descent is an algorithm that considers one column of data at a time, hence it will automatically convert the X input to a Fortran-contiguous numpy array if necessary.

Example of Non-Linear Regression in R: as a practical demonstration of non-linear regression in R, let us implement the Michaelis-Menten model.

In the mpg example, the predicted values track the actual mpg values to the extent that displacement explains almost 65% of the variability in mpg.
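A statement such as "almost 65% of the variability is explained" is usually read as the coefficient of determination, R squared. The sketch below shows how that quantity is computed from actual and predicted values. The numbers are hypothetical and are not the mpg data, and the function name `r_squared` is chosen only for this illustration.

```python
def r_squared(actual, predicted):
    """Share of the variance in `actual` that the predictions account for."""
    mean_actual = sum(actual) / len(actual)
    # Residual sum of squares: what the model fails to explain.
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    # Total sum of squares: variation around the mean of the observations.
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical observed values and model predictions.
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 6.5, 9.5]

score = r_squared(actual, predicted)
print(f"R^2 = {score:.2f}")
```

A value of 0.65 on real data would mean that the residual sum of squares is 35% of the total sum of squares.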
Regression models a target prediction value based on independent variables. The model is given by: y = a + b * x. An example is measuring a child's height every year of growth. If the graph is scattered and shows no relationship, it is recommended not to use a Linear Regression algorithm.

Linear regression has been studied from every possible angle, and often each angle has a new and different name. The reason is that linear regression has been around for so long (more than 200 years).

The following examples load a dataset in LibSVM format, split it into training and test sets, train on the first dataset, and then evaluate on the held-out test set.

Transforming the Variables with Log Functions in Linear Regression. A log transformation is a relatively common method that allows linear regression to perform curve fitting that would otherwise only be possible in nonlinear regression.
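The log transformation can be sketched with a single-predictor power function y = e^b0 * x^b1. Taking logarithms of both sides gives ln(y) = b0 + b1 * ln(x), which is linear in ln(x) and can be fitted with ordinary simple linear regression. The data and the helper name `fit_simple_ols` are hypothetical.

```python
import math


def fit_simple_ols(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical curved data generated from y = 2 * x**1.5.
xs = [1.0, 2.0, 4.0, 8.0, 16.0]
ys = [2.0 * x ** 1.5 for x in xs]

# Fit a straight line in log-log space.
log_xs = [math.log(x) for x in xs]
log_ys = [math.log(y) for y in ys]
b1, b0 = fit_simple_ols(log_xs, log_ys)

scale = math.exp(b0)  # back-transform the intercept to the original scale
print(f"exponent={b1:.3f} scale={scale:.3f}")
```

The transformation requires strictly positive x and y, and the least-squares criterion is then applied to the logged values and not to the original ones.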
Simple regression has one dependent variable (interval or ratio) and one independent variable (interval, ratio, or dichotomous). It is used to predict the real-valued output y based on the given input value x. The above figure shows a simple linear regression. The script shown in the steps below is main.py, which resides in the GitHub repository and is forked from the Dive into Deep Learning example repository. The check_input parameter is a bool that defaults to True. Below are the 5 types of Linear regression: 1.