Starting from the top of the tree, the first question is: Does my phone still work? Another key difference between random forests and gradient boosting is how they aggregate their results. In random forests, the results of decision trees are aggregated at the end of the process. A disadvantage of random forests is that they're harder to interpret than a single decision tree. Since my phone is still working, we follow the path of Yes and then we're done. This gives the predicted values that would be stored in y_pred. When you don't want to worry about feature selection or regularization or There's a common belief that due to the presence of many trees, this might lead to overfitting. Or, in the case of classification, collect the votes from every tree, and consider the most voted class as the final prediction.

Hands-on coding experience is delivered in the following section. One is the forest creation, and the other is the prediction of the results from the test data fed into the model. It depicts the contribution made by every feature in the training phase and scales all the scores such that they sum to 1. In Random Forest, along with the division of data, the features are also divided, and not all features are used to grow the trees. The ability to perform both tasks makes it unique, and enhances its widespread usage across a myriad of applications. They're also slower to build since random forests need to build and evaluate each decision tree independently. Each tree has its own set of features allocated to it. This is an added advantage that comes along, and this ensemble formed is known as the Random Forest.

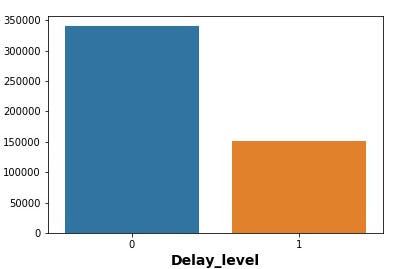

These are a limited set of values that fall under categorical data. Accuracy keeps increasing as you increase the number of trees, but becomes constant at a certain point. The input has 64 values, indicating that there are 64 attributes in the data, and the output class label is 0. Extract and then sort the values in descending order to first print the values that are the most significant. The random forest algorithm is a type of ensemble learning algorithm. The dataset should be split until the final entropy becomes zero. Import the libraries matplotlib.pyplot and seaborn to visualize the above feature importance outputs. A decision tree maps the possible outcomes of a series of related choices. Classifying the fraudulent and non-fraudulent actions.

First, they are prone to overfitting the data. My answer to that is yes, so the final decision would be to buy a new phone. Therefore, the final output of the random forest is an orange. better accuracy.

Entropy is a measure of unpredictability in the dataset. When one tree goes wrong, another tree might perform well. It selects the best result out of the votes that are pooled by the trees, making it robust. The grapes cannot be split further, as that subset has zero entropy. rather than bias part, so on a given training data set decision tree
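To make the entropy idea concrete, here is a minimal sketch using the fruit counts mentioned in this article (4 grapes, 2 apples, 2 oranges). The function names `entropy` and `information_gain` are illustrative, and the particular split (grapes versus everything else) is an assumption chosen only to show how a pure subset reaches zero entropy.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return sum(-(n / total) * math.log2(n / total) for n in counts.values())


def information_gain(parent, children):
    """Entropy of the parent minus the size-weighted entropy of its children."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted


fruits = ["grape"] * 4 + ["apple"] * 2 + ["orange"] * 2

# Hypothetical split: one branch holds only grapes, the other holds the rest.
grapes = [f for f in fruits if f == "grape"]
others = [f for f in fruits if f != "grape"]

mixed_entropy = entropy(fruits)   # entropy of the mixed root node
pure_entropy = entropy(grapes)    # a pure node has nothing left to split
gain = information_gain(fruits, [grapes, others])

print(mixed_entropy, pure_entropy, gain)
```

A split with a higher information gain removes more unpredictability, which is why a tree-building procedure would prefer it.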

It also reduces the risk of overfitting. Then explore the data by printing the data (input) and target (output) of the dataset.

y_train is the output in the training data. y_test is the output in the testing data. As mentioned previously, random forests use many decision trees to give you the right predictions. However, since we fit all the trees independently, we can build them simultaneously. It can be used as a feature selection tool. Like any machine learning algorithm, Random Forest consists of two phases: training and testing.

Training a forest of trees takes longer than constructing a single tree. A single tree is interpretable, whereas a forest is not.

Decision trees might lead to overfitting when the tree is very deep. The topmost or the main node is called the root node. Gradient boosting doesn't do this and instead aggregates the results of each decision tree along the way to calculate the final result. This means that it uses multiple decision trees to make predictions. Each branch of the tree represents a possible decision, occurrence or reaction. Scikit-learn provides a good feature indicator denoting the relative importance of all the features. A new fruit whose diameter is 3 is given to the model. Gradient boosting is really popular nowadays thanks to its efficiency and performance. The rationale is that an ensemble of models is likely more accurate than a single tree. The main advantage of using a Random Forest algorithm is its ability to support both classification and regression. Due to the accuracy of its classification, its usage has increased over the years. That leaves us with the question of which tree to trust. Despite it occasionally making me want to tear my hair out.


Cross-validation is generally used to detect and reduce overfitting in machine learning algorithms. This is where we introduce random forests. It's similar to a leave-one-out cross-validation method. The n_estimators parameter indicates that 100 trees are to be included in the Random Forest.

Let's print them. That will speed up the training process but require infrastructure suitable for parallel computing. Unlike a decision tree, it won't create a highly biased model, and it reduces the variance. I need to understand the difference between random forests and decision trees, and what the advantages of random forests are compared to decision trees. You can overfit the tree and build a model if you are sure of Although a forest seems to be a more accurate model than a tree, the latter has a crucial advantage. It takes training data and tests it with various test data sets across multiple iterations denoted by k, hence the name k-fold cross-validation. Overall, a decision tree is simple to understand and easier to interpret and visualize. This is where the Decision Tree is used. A decision tree is a decision support tool that uses a tree-like graph or model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. test_size indicates that 70% of the data points come under training data and 30% come under testing data. Unlike random forests, the decision trees in gradient boosting are built additively; in other words, each decision tree is built one after another. Only a selected bag of features is taken into consideration, and a randomized threshold is used to create the Decision tree.
Firstly, from the datasets library in the sklearn package, import the MNIST-style handwritten digits data. There are several terms associated with a decision tree. The output of the random forest is based on the outputs of all its decision trees.


Namely, even small changes to the training data, such as excluding a few instances, can result in a completely different tree. worry about multi-collinearity.

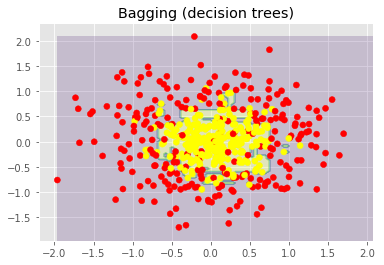

The algorithm is an extension of Bagging. Humans can visualize and understand a tree, no matter if they're machine learning experts or laypeople. Random forest is also an example of a supervised learning algorithm, so the presentation of these two concepts as mutually exclusive alternatives is not correct. From here on, let's understand how Random Forest is coded using the scikit-learn library in Python. Firstly, measuring the feature importance gives a better overview of what features actually affect the predictions. However, we can also weight the predictions by the trees' estimated accuracy scores so that the more precise trees get more influence. Random forests use the concept of collective intelligence: an intelligence and enhanced capacity that emerges when a group of things work together. This article starts off by describing how a Decision Tree often acts as a stumbling block, and how a Random Forest classifier comes to the rescue. pd.Series is used to fetch a 1D array of int datatype. You are right that the two concepts are similar. Train the model on the training data using the RandomForestClassifier fetched from the ensemble package present in sklearn. A decision tree is a classification model that formulates a specific set of rules indicating the relations among the data points. To understand how these algorithms work, it's important to know the differences between decision trees, random forests and gradient boosting. The first decision tree will categorize it as an orange.

It is popular because it is simple and easy to understand. Random forests contain multiple trees, so even if one overfits the data, that probably won't be the case with the others. The randomly split dataset is distributed among all the trees wherein each tree focuses on the data that it has been provided with. validation or test data set is going to be subset of training data

There are 4 grapes, 2 apples, and 2 oranges. Machine learning is an application of Artificial Intelligence, which gives a system the ability to learn and improve based on past experience. feature_importances_ is provided by the sklearn library as part of the RandomForestClassifier. The random forest algorithm achieves this by averaging the predictions of the individual decision trees. set or almost overlapping instead of unexpected. You can see that if we really wanted to, we could keep adding questions to the tree to increase its complexity. Training a large number of trees takes a considerable amount of time. Identification of the customers' behaviors. If that doesn't make any sense, then don't worry about that for now.

This, in turn, helps in shortlisting the important features and dropping the ones that don't make a huge impact (no impact or less impact) on the model building process. In contrast, we can also remove questions from a tree (called pruning) to make it simpler. As the decisions to split the nodes progress, every attribute is taken into consideration. The decision tree algorithm is a type of supervised learning algorithm. Moreover, the model can also get unstable due to small variations. When using a decision tree model on a given training dataset, the accuracy keeps improving with more and more splits. Creating an ensemble of these trees seemed like a remedy to solve the above disadvantages. Further, they are sometimes the only choice since the regulations forbid the models whose decisions the end-users can't understand. Following are the three decision trees that categorize these three fruit types. However, this simplicity comes with some serious disadvantages. The fit() method fits the data by training the model on X_train and y_train. Random forest is a method that operates by constructing multiple decision trees during the training phase.
As I mentioned previously, each decision tree can look very different depending on the data; a random forest will randomise the construction of decision trees to try and get a variety of different predictions. Segregate the input (X) and the output (y) into train and test data using train_test_split imported from the model_selection package present under sklearn. So, even if a tree overfits its subset, we expect other trees in the forest to compensate for it. A disadvantage of using a supervised learning algorithm is that it can take longer to train than an unsupervised learning algorithm. Repeat the above 4 steps until B trees are generated. The above decision tree classifies a set of fruits. Predict the outputs using the predict() method applied on the X_test data.

In essence, gradient boosting is just an ensemble of weak predictors, which are usually decision trees. This gives 97.96% as the estimated accuracy of the trained Random Forest classifier.

Since accuracy improves with each internal node, training will tend to grow a tree to its maximum to improve the performance metrics. However, these trees are not being added without purpose. In this tutorial, we'll show the difference between decision trees and random forests. Hopefully, this post can clarify some of the differences between these algorithms. Additionally, its structure can change significantly even if the training data undergo a negligible modification. This, in theory, should be closer to the true result that we're looking for via collective intelligence. Check the accuracy using the accuracy_score method imported from the metrics package present in sklearn.
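The coding steps described across this article (load the data, split it, train, predict, score, and rank feature importances) can be sketched end to end as follows. This is a sketch under stated assumptions, not the article's original listing: `load_digits` is assumed because the text mentions 64 attributes, `test_size=0.3` follows the 70/30 split described, and `random_state=42` is an arbitrary value added only for reproducibility. The accuracy you get may differ from the figure quoted in the article.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Assumption: the 64-attribute digits dataset bundled with scikit-learn.
digits = load_digits()
X, y = digits.data, digits.target
print(X.shape, y.shape)

# 70% of the rows go to training, 30% to testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42  # random_state is an arbitrary choice
)

# Build a forest of 100 trees and train it on the training split.
clf = RandomForestClassifier(n_estimators=100, random_state=42)
clf.fit(X_train, y_train)

# Predict on unseen data and compare against the true labels.
y_pred = clf.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print("accuracy:", accuracy)

# Importances are scaled to sum to 1; list the 10 largest in descending order.
importances = clf.feature_importances_
top10 = np.argsort(importances)[::-1][:10]
for idx in top10:
    print(f"feature {idx}: {importances[idx]:.4f}")
```

The sorted indices in `top10` could then be passed to a bar plot (for example with matplotlib or seaborn) to visualize the most significant features.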

The default hyperparameters often give a good prediction. Random forest will reduce variance part of error This means that it requires a training dataset in order to learn how to make predictions. At every step, it uses some technique to find the optimal split. In addition to the hyper-parameters we need for a tree, we have to consider the number of trees in our forest. In a Random Forest classifier, several factors need to be considered to interpret the patterns among the data points. Now I know what you're thinking: This decision tree is barely a tree. The second decision tree will categorize it as a cherry while the third decision tree will categorize it as an orange. Step 5: Visualizing the feature importance. The difference between decision tree and random forest is that a decision tree is a graph that uses a branching method to illustrate every possible outcome of a decision while a random forest is a set of decision trees that gives the final outcome based on the outputs of all its decision trees. Thus, this decision tree classifies an apple, grape or orange with 100% accuracy. A random forest generally gives more accurate results than a decision tree. We want to predict if a day is suitable for playing outside. Let's look into the inner details about the working of a Random Forest, and then code the same in Python using the scikit-learn library. This seems like a less biased and more reliable output and is the typical Random Forest approach. Yet, Decision Tree is computationally faster in comparison to Random Forest because of the ease in generating rules. Assume there is a set of fruits (cherries, apples, and oranges).
Overall, gradient boosting usually performs better than random forests, but it is prone to overfitting; to avoid this, we need to remember to tune the parameters carefully. It does not require a lot of data preparation. Popular algorithms like XGBoost and CatBoost are good examples of using the gradient boosting framework. So, we expect an ensemble to be more accurate than a single tree and, therefore, more likely to yield the correct prediction. So again, we follow the path of No and go to the final question: Do I have enough disposable income to buy a new phone? Each leaf contains a subset of the training dataset. The advantage of using a supervised learning algorithm is that it can learn complex patterns in the data. Random Forest classifier is used in several applications spanning across different sectors like banking, medicine, e-commerce, etc. In supervised learning, the algorithm is trained with labeled data that guides you through the training process. That's not the case with forests. This can tell us the number of trees based on the k value. To prove the same, observe the shapes of X and y wherein the data and target are stored. Overall, the random forest provides accurate results on a larger dataset. It can be used to solve unsupervised ML problems.
The more trees it has, the more sophisticated the algorithm is. However, the best node locally might not be the best node globally. The final decisions or the classifications are called the leaf nodes. The main one is overfitting. A decision tree is a tree-shaped diagram that is used to determine a course of action. In the Bagging technique, several subsets of data are created from the given dataset.
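As an illustration of how Bagging creates several subsets from one dataset, here is a minimal standard-library sketch. It draws row indices with replacement, one sample per tree; the rows a sample never picks are commonly called out-of-bag rows and can serve as a built-in validation set. The function name `bootstrap_samples` and the seed are hypothetical, chosen only for this example.

```python
import random


def bootstrap_samples(n_rows, n_trees, seed=0):
    """Draw one bootstrap sample of row indices per tree.

    Returns a list of (in_bag, out_of_bag) pairs. Each in_bag list has
    n_rows indices drawn with replacement, so some rows repeat and others
    are left out; the left-out rows form the out-of-bag list.
    """
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    samples = []
    for _ in range(n_trees):
        in_bag = [rng.randrange(n_rows) for _ in range(n_rows)]
        out_of_bag = sorted(set(range(n_rows)) - set(in_bag))
        samples.append((in_bag, out_of_bag))
    return samples


# Eight rows (for example, the eight fruits) and three trees.
samples = bootstrap_samples(n_rows=8, n_trees=3)
for tree_id, (in_bag, out_of_bag) in enumerate(samples):
    print(f"tree {tree_id}: in-bag={in_bag} out-of-bag={out_of_bag}")
```

Because every tree sees a slightly different sample, the trees disagree in useful ways, which is what the aggregation step later exploits.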

In our example, the further down the tree we went, the more specific the tree was for my scenario of deciding to buy a new phone. Decision trees suffer from two problems. When considering all three trees, there are two outputs for orange. There's a lot more in store, and Random Forest is one way of tackling a machine-learning solvable problem. This is accomplished using a variety of techniques such as Information Gain, Gini Index, etc. Decision tree and random forest are two techniques in machine learning. These datasets are segregated and trained separately.
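The voting step from the fruit example (two trees say orange, one says cherry) can be sketched in a few lines. The accuracy-weighted variant is included because the article mentions weighting trees by their estimated accuracy; the weight values below are made up purely for illustration, and both function names are hypothetical.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the class predicted by the largest number of trees."""
    return Counter(predictions).most_common(1)[0][0]


def weighted_vote(predictions, weights):
    """Return the class with the largest total weight across trees."""
    totals = {}
    for label, weight in zip(predictions, weights):
        totals[label] = totals.get(label, 0.0) + weight
    return max(totals, key=totals.get)


# Predictions of the three trees for the new fruit.
tree_predictions = ["orange", "cherry", "orange"]
forest_prediction = majority_vote(tree_predictions)

# Hypothetical per-tree accuracy scores used as weights.
tree_weights = [0.6, 0.9, 0.7]
weighted_prediction = weighted_vote(tree_predictions, tree_weights)

print(forest_prediction, weighted_prediction)
```

With these weights both schemes agree, but a single highly trusted tree could outvote two weaker ones under weighted voting, which is the trade-off to keep in mind.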

A few discrepancies can obstruct the smooth implementation of decision trees. To overcome such problems, Random Forest comes to the rescue.

For example, here's a tree predicting if a day is good for playing outside based on the weather conditions. The internal nodes tell us which features to check, and the leaves reveal the tree's prediction. It is important to split the data in such a way that the information gain becomes higher. As a result, the number of hyper-parameter combinations to check during cross-validation grows. Lithmee holds a Bachelor of Science degree in Computer Systems Engineering and is reading for her Master's degree in Computer Science. They contain a lot of trees, so explaining how they output the aggregated predictions is very hard, if not impossible. In the previous sections, feature importance has been mentioned as an important characteristic of the Random Forest Classifier. If we created our decision tree with a different question in the beginning, the order of the questions in the tree could look very different. Identifying the pathologies by finding out the common patterns. In the next section, let's look into the differences between Decision Trees and Random Forests. As shown in the examples above, decision trees are great for providing a clear visual for making decisions.
A decision tree is simpler and easier to understand, interpret and visualize than a random forest, which is comparatively more complex. They're also very easy to build computationally. X_train is the input in the training data. It tries to be perfect in order to fit all the training data accurately, and therefore learns too much about the features of the training data and reduces its ability to generalize. The accuracy is estimated by comparing the predicted values (y_pred) against the actual values (y_test). Let's compute that now. Each new tree is built to improve on the deficiencies of the previous trees, and this concept is called boosting. Still, constructing a forest isn't just a matter of spending more time. A good example would be XGBoost, which has already helped win a lot of Kaggle competitions. How do they produce the predictions? There is a possibility of overfitting in a decision tree. On the other hand, the noise in data can cause overfitting. Give the input and output values wherein x is given by the feature importance values, and y is the 10 most significant features out of the 64 attributes respectively. After splitting the dataset, the entropy level decreases as the unpredictability decreases.
After a large number of trees are built using this method, each tree "votes" or chooses the class, and the class receiving the most votes by a simple majority is the "winner" or predicted class. Also, the overfitting problem is reduced by taking several random subsets of data and feeding them to various decision trees. It can handle both numerical and categorical data. It's a forest you can build and control. validation data set, Random forest always wins in terms When categorizing based on the color, i.e., whether the fruit is red or not, apples are classified into one side while oranges are classified to the other side. Training: For b in 1, 2, ..., B (B is the number of decision trees in a random forest). All its instances pass all the checks on the path from the root to the leaf. The Random Forest algorithm is one of the most popular machine learning algorithms that is used for both classification and regression. That's why we use different subsets for each tree, to force them to approach the problem from different angles. Later, the pseudocode is broken down into various phases by simultaneously exploring the math flavor in it. As the name suggests, random forests build a bunch of decision trees independently. Random Forest is a Supervised Machine Learning classification algorithm. While it can be used for both classification and regression, Random Forest has an edge over other algorithms in several ways. Random Forest was first proposed by Tin Kam Ho at Bell Laboratories in 1995. Although forests address the two issues of decision trees, there's a question of complexity.
