So, let's see how to install PySpark with Docker. Copy the Docker pull command and run it in Windows PowerShell or Git Bash. To remove the container permanently, run `docker rm` followed by the container name or ID. In future posts, I will write more about how to use Docker for data science.

If you want to use a different version of Spark and Hadoop, select it from the drop-downs; the link in point 3 then changes to the selected version and gives you an updated download link. After extracting the download, you will find another compressed directory in `.tar` format; extract that `.tar` to the same path as well.

Even if you are not working with Hadoop (or are only using Spark for local development), Spark on Windows still needs Hadoop's `winutils.exe` to initialize the Hive context; otherwise Java throws `java.io.IOException`.

All you need is Spark; follow the steps below to install PySpark on Windows. I would recommend Anaconda, as it is popular and widely used by the machine learning and data science community. If you already use a development environment, you are covered as long as it runs Python 3 or higher.

Step 8: Next, type the following commands in the terminal.

Step 9: Add the path to the system variable. By default, Java is installed in `C:\Program Files\Java`. In my case, the environment variables look like this once they are set up:

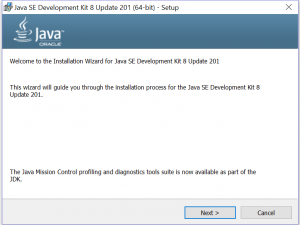

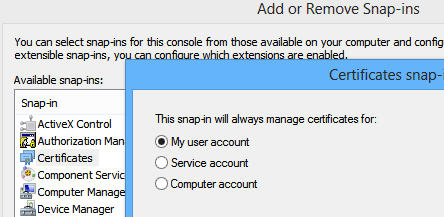
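
Because the Hive context fails without `winutils.exe`, it can help to check its location before launching `pyspark`. The sketch below is a minimal pre-flight check, assuming (as in this setup) that `winutils.exe` lives in `%HADOOP_HOME%\bin`, which is where Hadoop looks for it on Windows. `expected_winutils` and `winutils_problem` are hypothetical helper names, not part of Spark or Hadoop.

```python
import ntpath
import os
from typing import Callable, Mapping, Optional


def expected_winutils(hadoop_home: str) -> str:
    """Return the location Hadoop expects: %HADOOP_HOME%\\bin\\winutils.exe."""
    # ntpath builds Windows-style paths even if this runs on another OS.
    return ntpath.join(hadoop_home, "bin", "winutils.exe")


def winutils_problem(
    env: Mapping[str, str],
    exists: Callable[[str], bool] = os.path.isfile,
) -> Optional[str]:
    """Describe what is wrong, or return None if winutils.exe is in place."""
    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        return "HADOOP_HOME is not set"
    path = expected_winutils(hadoop_home)
    if not exists(path):
        return f"winutils.exe not found at {path}"
    return None


if __name__ == "__main__":
    problem = winutils_problem(os.environ)
    print(problem or "winutils.exe found")
```

Run it from the same Command Prompt you will use for `pyspark`, so it sees the same environment variables. The `exists` parameter is only there so the check can be exercised without touching the file system.
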

How to install Spark (PySpark) on Windows.

Make sure your PyCharm setup with Python is done and validated by running a Hello World program. I have installed everything on Windows 10. I had been trying to install PySpark on my Windows laptop for 3 days, and what works on one machine does not guarantee that it will also work on others.

Once the JDK is successfully installed on your machine, set JAVA_HOME = c:\Program Files\Java\jdk_ and update the PATH variable.

Go to the Spark download page. PySpark is included in the Spark download, so there is no separate PySpark library to download. Download `winutils.exe` from the winutils link and copy it to the `%SPARK_HOME%\bin` folder. Spark SQL supports Apache Hive using HiveContext.

For the Python environment, download the version compatible with your system (2.1.9 for Windows) from Enthought Canopy, or install Anaconda, which installs all the necessary packages.

If all of this is done, follow all the steps from step 4, and then execute `pyspark` as shown below. This should start the PySpark shell, which can be used to work with Spark interactively. We get the following messages in the console after running the `bin\pyspark` command.

Now let us test whether our installation was successful, using Test 1 and Test 2 below. Run the following code; if it runs successfully, PySpark is installed.

When I started the history server, the log showed `INFO Utils: Successfully started service on port 18080` and then failed with `Caused by: java.io.FileNotFoundException: File file:/tmp/spark-events does not exist`, raised from `FsHistoryProvider.startPolling` (called from `FsHistoryProvider.initialize`), because that directory did not exist.

Copyright 2021 gankrin.org | All Rights Reserved | DO NOT COPY information.
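
To confirm the JAVA_HOME and PATH step, a small script can compare the `bin` folders of the configured home directories against PATH. This is a minimal sketch assuming a Windows PATH (entries separated by `;`) and that JAVA_HOME, SPARK_HOME and, optionally, HADOOP_HOME are set as described in this article; `required_bin_dirs` and `missing_from_path` are hypothetical helper names. Windows compares paths case-insensitively, so the sketch normalizes case and trailing backslashes with `ntpath` before comparing.

```python
import ntpath
import os
from typing import List, Mapping


def required_bin_dirs(env: Mapping[str, str]) -> List[str]:
    """Return the bin folders of JAVA_HOME, SPARK_HOME and HADOOP_HOME (if set), without duplicates."""
    dirs: List[str] = []
    for var in ("JAVA_HOME", "SPARK_HOME", "HADOOP_HOME"):
        home = env.get(var)
        if home:
            bin_dir = ntpath.join(home, "bin")
            if bin_dir not in dirs:  # HADOOP_HOME often points at SPARK_HOME
                dirs.append(bin_dir)
    return dirs


def _key(path: str) -> str:
    # Case-insensitive comparison; normpath also drops a trailing backslash.
    return ntpath.normcase(ntpath.normpath(path))


def missing_from_path(env: Mapping[str, str]) -> List[str]:
    """List required bin folders that are not entries of PATH."""
    entries = {_key(p) for p in env.get("PATH", "").split(";") if p.strip()}
    return [d for d in required_bin_dirs(env) if _key(d) not in entries]


if __name__ == "__main__":
    missing = missing_from_path(os.environ)
    print("PATH looks complete" if not missing else f"Add to PATH: {missing}")
```

Open a new Command Prompt after editing the variables before running it; an already open window keeps the old environment.
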
In this article, I will explain how to install and run PySpark on Windows, and also how to start a history server and monitor your jobs using the Web UI. PySpark is a Spark library written in Python to run Python applications using Apache Spark capabilities. From now on, we shall refer to the folder where Spark is extracted as SPARK_HOME in this document.

Before you start the history server, you first need to set `spark.eventLog.enabled true` and `spark.eventLog.dir file:///C:/logs/path` in `spark-defaults.conf`. If you copy these lines from the template, remove the leading `#`, because `#` marks a comment. Write the directory as a `file:///` URI: a value like `C://logs/path` is parsed as a URI whose scheme is `C`. The history server itself reads from `spark.history.fs.logDirectory`, which defaults to `file:/tmp/spark-events`, so point it at the same directory (for example `spark.history.fs.logDirectory file:///C:/logs/path`) and make sure that directory exists.

If you have correctly reached this point, your Spark environment is ready on Windows. As a next step, you can also run Spark jobs using spark-submit.

Differences between machines are exactly what Docker is trying to solve. First, go to the https://www.docker.com/ website and create an account. Once account creation is done, you can log in. To find the Spark package and Java SDK, add the following lines to your `.bash_profile`.
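
The event-log settings above can also be generated and checked from Python. This is a sketch under stated assumptions: the log folder is an absolute Windows path without spaces or special characters, and `parse_spark_defaults` handles only the whitespace-separated `key value` form used in Spark's template (Spark's own loader is more permissive). Both function names are hypothetical.

```python
from pathlib import PureWindowsPath
from typing import Dict


def history_server_conf(log_dir: str) -> str:
    """Render spark-defaults.conf lines for event logging plus the history server."""
    path = PureWindowsPath(log_dir)
    if not path.is_absolute():
        raise ValueError(f"expected an absolute Windows path, got {log_dir!r}")
    # C:\logs\path -> file:///C:/logs/path (assumes no spaces that need escaping).
    uri = "file:///" + path.as_posix()
    lines = [
        "spark.eventLog.enabled true",
        f"spark.eventLog.dir {uri}",
        # Where the history server reads logs; it defaults to file:/tmp/spark-events.
        f"spark.history.fs.logDirectory {uri}",
    ]
    return "\n".join(lines) + "\n"


def parse_spark_defaults(text: str) -> Dict[str, str]:
    """Parse whitespace-separated 'key value' lines, skipping blanks and '#' comments."""
    conf: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # a leading '#' means the setting is not active
        parts = line.split(None, 1)
        conf[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return conf


if __name__ == "__main__":
    print(history_server_conf(r"C:\logs\path"), end="")
```

The printed lines can be pasted into `%SPARK_HOME%\conf\spark-defaults.conf`. Parsing the file afterwards is a quick way to confirm that no setting is still commented out.
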
I tried almost every method given in various blog posts on the internet, but nothing seemed to work. The methods described in many articles worked on some machines but not on many others, because we all have different hardware and software configurations.

Spark supports a number of programming languages, including Java, Python, Scala, and R. In this tutorial, we will set up Spark with a Python development environment using the Spark Python API (PySpark), which exposes the Spark programming model to Python, and we will discuss the PySpark installation on various operating systems.

Install a Python development environment. Enthought Canopy is one such environment, just like Anaconda; a set of plugins is also bundled together as part of its enterprise edition. Alternatively, download Anaconda from the provided link and install it. (If you have Python 2.7 pre-installed, it may conflict with the new Python 3 development environment.) Once installed, you will see a screen like this. Setting up Google Chrome is recommended if you do not have it. Upon selecting Python3, a new notebook opens, which we can use to run Spark through PySpark.

Click the link next to Download Spark to download `spark-2.4.0-bin-hadoop2.7.tgz`, and download the Hadoop 2.7 `winutils.exe`.

Let us see how we can configure the environment variables of Spark. Create a new variable, or edit it if it already exists.

> Go to the Spark bin folder and copy the bin path, C:\Spark\spark-2.2.1-bin-hadoop2.7\bin.

> Type `cd C:\Spark\spark-2.2.1-bin-hadoop2.7\bin`.

Run `source ~/.bash_profile`, or open a new terminal to auto-source this file.

Copyrights 2020 All Rights Reserved by Crayon Data.
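
If you prefer not to extract the archive twice (first the `.tgz`, then the inner `.tar`), Python's standard `tarfile` module can read the gzip-compressed tarball in one step. This is a sketch, not part of Spark's tooling: `extract_spark_archive` is a hypothetical helper, and it assumes the archive has a single top-level folder such as `spark-2.4.0-bin-hadoop2.7`, whose extracted path can then serve as SPARK_HOME.

```python
import tarfile
from pathlib import Path


def extract_spark_archive(archive: str, dest: str) -> Path:
    """Extract a Spark .tgz in one step and return its top-level folder."""
    dest_path = Path(dest)
    dest_path.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, mode="r:gz") as tar:
        # Spark archives are expected to contain exactly one top-level folder.
        top_levels = {name.split("/", 1)[0] for name in tar.getnames()}
        if len(top_levels) != 1:
            raise ValueError(f"expected one top-level folder, found {sorted(top_levels)}")
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons: refuse absolute paths and members outside dest.
            tar.extractall(dest_path, filter="data")
        else:
            tar.extractall(dest_path)
    return dest_path / top_levels.pop()


if __name__ == "__main__":
    home = extract_spark_archive("spark-2.4.0-bin-hadoop2.7.tgz", r"C:\Spark")
    print(f"Set SPARK_HOME to {home}")
```

The `filter="data"` branch is used only where the running Python supports extraction filters; older interpreters fall back to plain `extractall`.
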
Set HADOOP_HOME to the same folder as SPARK_HOME (for example, `HADOOP_HOME = %SPARK_HOME%`). That way, you don't have to change HADOOP_HOME if SPARK_HOME is updated.

Now, inside the new directory `c:\spark`, go to the `conf` directory and rename the `log4j.properties.template` file to `log4j.properties`.

Muhammad Imran is a regular content contributor at Folio3.Ai. In this growing technological era, I love to stay updated as a techy person.
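
The rename can be scripted if you set up several machines. This is a minimal sketch: `enable_log4j_properties` is a hypothetical helper, and it assumes the Spark 2.x file name `log4j.properties.template` used above; newer Spark releases may ship a differently named template. It leaves an existing `log4j.properties` untouched so local edits are not overwritten.

```python
from pathlib import Path


def enable_log4j_properties(spark_home: str) -> Path:
    """Rename conf/log4j.properties.template to conf/log4j.properties, once."""
    conf_dir = Path(spark_home) / "conf"
    template = conf_dir / "log4j.properties.template"
    target = conf_dir / "log4j.properties"
    if target.exists():
        return target  # already renamed earlier; keep any local edits
    if not template.exists():
        raise FileNotFoundError(f"no template at {template}")
    template.rename(target)
    return target


if __name__ == "__main__":
    print(enable_log4j_properties(r"c:\spark"))
```
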

In this very first chapter, we will make a complete end-to-end setup of Apache Spark on Windows.

Run `java -version` in the terminal to check the version of Java on your system. Spark 2.2 and later, including the 2.2.1 and 2.4.0 packages used in this article, require Java 8 and Python 2.7+ or 3.4+ (support for Java 7 and Python 2.6 was removed in Spark 2.2). The JDK is typically installed in a path like `C:\Program Files\Java\jdk1.8.0_191`.

Now set the following environment variables. Copy the path and add it to the PATH variable. History servers keep a log of all PySpark applications you submit with spark-submit or the pyspark shell.

You can try this and let us know if you face any specific issues.
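The Java check is easy to misread: Java 8 reports itself as `1.8.0_191`, while Java 9 and later report `11.0.2`, `17`, and so on. Here is a small sketch that runs `java -version` and extracts the major version. It assumes `java` is on PATH and that the output contains the usual `version "..."` string; `java_major_version` and `installed_java_major` are hypothetical helper names. Note that `java -version` writes to standard error, not standard output.

```python
import re
import shutil
import subprocess
from typing import Optional


def java_major_version(version_output: str) -> Optional[int]:
    """Extract the major version from `java -version` output.

    Java 8 and earlier use the "1.x" scheme ("1.8.0_191" -> 8);
    Java 9+ put the major version first ("11.0.2" -> 11, "17" -> 17).
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if not match:
        return None
    first = int(match.group(1))
    if first == 1 and match.group(2):
        return int(match.group(2))  # legacy "1.x" numbering
    return first


def installed_java_major() -> Optional[int]:
    """Run `java -version` and parse its stderr; None if java is not on PATH."""
    if shutil.which("java") is None:
        return None
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return java_major_version(result.stderr)


if __name__ == "__main__":
    print(installed_java_major())
```

For the Spark 2.x packages in this article, you would expect `installed_java_major()` to return 8.
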

Apache Spark is a powerful framework for in-memory computation and parallel task execution, with Scala, Python, and R interfaces, providing APIs for massively distributed processing over resilient distributed datasets (RDDs). Spark is written in Scala, and Scala requires the Java Virtual Machine to execute, so Java must be installed first.

Follow the steps below to install PySpark on Windows. First, confirm that your system is 64-bit, as our environment requires: open File Explorer and right-click This PC to see the system details. Once these details are confirmed, we can go further.

To check whether Java is available and find its version, open a Command Prompt and type `java -version`. If Java is missing, run the downloaded executable to install the Java JDK; from here on, let's assume Java is installed. To check whether Python is available, open a Command Prompt and type `python --version`. After the installation is complete, close the Command Prompt if it was already open, reopen it, and check that `python --version` runs successfully. However, it doesn't support Spark development out of the box.

Let's download `winutils.exe` and configure our Spark installation to find it. Inside the Compatibility tab, ensure Run as Administrator is checked.

Now run the `pyspark` command, and it will display the following window. The first console line reads `Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties`. We will learn about the basic functionalities of PySpark in a later tutorial.

Create a new Jupyter notebook. We will develop a program in the next section to validate the installation. Let us create a program that uses `sys.argv`; finally, the `collect` action is called on the new RDD to return a plain Python list.

2020 www.learntospark.com, All rights are reserved.
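
The `sys.argv` program itself is not included above, so the following is only a sketch of what such a validation script could look like. It assumes a hypothetical file name `argv_demo.py`, takes integers from the command line, squares them on an RDD, and uses `collect()` to bring the result back as a plain Python list. `SparkSession.builder`, `parallelize`, `map`, and `collect` are standard PySpark APIs; the argument format is my assumption.

```python
import sys
from typing import List, Optional


def parse_numbers(argv: List[str]) -> List[int]:
    """argv[0] is the script path; every remaining argument must be an integer."""
    if len(argv) < 2:
        raise SystemExit(f"usage: {argv[0] if argv else 'argv_demo.py'} N [N ...]")
    return [int(arg) for arg in argv[1:]]


def main(argv: Optional[List[str]] = None) -> int:
    numbers = parse_numbers(sys.argv if argv is None else argv)

    # Imported here so parse_numbers can be used without a Spark installation.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("argv-demo").getOrCreate()
    try:
        rdd = spark.sparkContext.parallelize(numbers)
        squares = rdd.map(lambda n: n * n).collect()  # collect() returns a Python list
        print(squares)
    finally:
        spark.stop()
    return 0


if __name__ == "__main__":
    sys.exit(main())
```

Submitting it with `spark-submit argv_demo.py 1 2 3` should print `[1, 4, 9]` among Spark's log output.
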