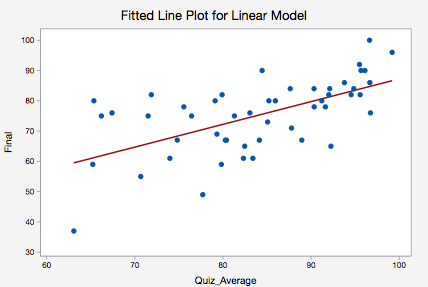

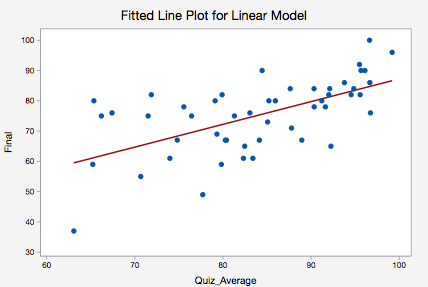

What if you have more than one independent variable? In the more general multiple regression model, there are \(p\) independent variables:

\[ y_i = \beta_1 x_{i1} + \beta_2 x_{i2} + \cdots + \beta_p x_{ip} + \varepsilon_i, \]

where \(x_{ij}\) is the \(i\)-th observation on the \(j\)-th independent variable. If the first independent variable takes the value 1 for all \(i\) (\(x_{i1} = 1\)), then \(\beta_1\) is called the regression intercept. A rule of thumb for the sample size is that regression analysis requires at least 20 cases per independent variable. In the simplest case of just two independent variables, that means at least 40 cases.

Multiple linear regression is an extended version of linear regression. It determines the relationship between one response variable and two or more predictor variables, whereas simple linear regression uses only a single predictor. Most of the time we use multiple linear regression instead of a simple linear regression model because the target variable usually depends on more than one variable. In this blog post, I want to focus on the concept of linear regression and mainly on its implementation in Python.

While it is possible to do multiple linear regression by hand, it is much more commonly done with statistical software such as R. In MATLAB, fitting the model with `fitlm` is recommended for greater accuracy on low- through medium-dimensional data sets. The analysis also relies on assumptions. For example, it requires that the errors between observed and predicted values (i.e., the residuals of the regression) be normally distributed, an assumption the usual t- and F-tests depend on.
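
The following minimal Python sketch (standard library only) illustrates the intercept convention and the sample-size rule of thumb. The helper names `add_intercept` and `min_cases` are hypothetical and chosen for illustration. The first helper prepends the constant column \(x_{i1} = 1\). The second applies the 20-cases-per-variable heuristic.

```python
def add_intercept(rows):
    """Prepend the constant 1 to every observation, so that x_i1 = 1 for all i.

    The coefficient estimated for this constant column is the regression intercept.
    """
    return [[1.0] + [float(v) for v in row] for row in rows]


def min_cases(num_predictors, cases_per_predictor=20):
    """Rule-of-thumb minimum sample size: 20 cases per independent variable."""
    return num_predictors * cases_per_predictor


# Hypothetical data: two predictors observed for three cases.
X = add_intercept([[2, 5], [3, 1], [4, 7]])
print(X)             # [[1.0, 2.0, 5.0], [1.0, 3.0, 1.0], [1.0, 4.0, 7.0]]
print(min_cases(2))  # 40
```

R's `lm()` adds this constant column automatically, so in practice you rarely build it yourself. The 20-case figure is a heuristic, not a guarantee of adequate power.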

Before applying linear regression models, make sure to check that a linear relationship exists between the dependent variable (i.e., what you are trying to predict) and the independent variable(s) (i.e., the input variable(s)). Anscombe's quartet illustrates why. Its four datasets give essentially identical simple linear regression estimates, yet simple visual inspection clearly shows that the relationships differ. The simple linear regression model is easy to fit, but it is not appropriate for every kind of dataset.

Multiple linear regression (MLR), sometimes known simply as multiple regression, is a statistical technique that uses several explanatory variables to predict the outcome of a response variable. For example, the exam score received by each of 20 students can be modeled from the number of hours they studied and the number of prep exams they took.

When interpreting a fitted model, R-squared is the proportion of the variance in the response variable that can be explained by the predictor variables. The p-value for each term tests the null hypothesis that the coefficient is equal to zero (no effect). A typical report reads: "A significant regression equation was found (F(2, 13) = 981.202, p < .001), with an R² of .993." Here F(2, 13) means 2 predictors and 13 residual degrees of freedom, so the model was fitted to 13 + 2 + 1 = 16 observations.

In MATLAB's `regress`, the coefficient estimates for multiple linear regression are returned as a numeric vector `b`. If the columns of `X` are linearly dependent, `regress` sets the maximum number of elements of `b` to zero.
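
R-squared, adjusted R-squared and the overall F statistic can all be computed from the observed and fitted values. The sketch below uses a hypothetical helper, `fit_statistics`. It assumes an ordinary least squares fit that includes an intercept and has `k` predictors; the identity \(F = \dfrac{R^2/k}{(1 - R^2)/(n - k - 1)}\) relies on that setup.

```python
def fit_statistics(y, y_hat, k):
    """Return (R^2, adjusted R^2, F) for an OLS fit with k predictors plus an intercept.

    y: observed responses; y_hat: fitted values; k: predictors (excluding intercept).
    """
    n = len(y)
    mean_y = sum(y) / n
    ss_total = sum((yi - mean_y) ** 2 for yi in y)              # total variation
    ss_resid = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # unexplained variation
    r2 = 1 - ss_resid / ss_total
    df_resid = n - k - 1                                        # residual degrees of freedom
    adj_r2 = 1 - (1 - r2) * (n - 1) / df_resid
    f_stat = (r2 / k) / ((1 - r2) / df_resid)                   # overall F on (k, n-k-1) df
    return r2, adj_r2, f_stat


# Fitted values from an OLS fit on a hypothetical 0/1 predictor x = [0, 0, 1, 1].
stats = fit_statistics([1, 2, 3, 4], [1.5, 1.5, 3.5, 3.5], k=1)
print([round(s, 3) for s in stats])  # [0.8, 0.7, 8.0]
```

For the F(2, 13) result quoted above, \(k = 2\) and \(n - k - 1 = 13\), which gives \(n = 16\).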

Linear regression is an approach that tries to find a linear relationship between a dependent variable and an independent variable by minimizing the distance between the observed points and the fitted line. Ordinary least squares minimizes the sum of the squared vertical distances. Multiple linear regression is the analysis procedure to use when more than one explanatory variable is included in a model.
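
For a single predictor, minimizing the sum of squared vertical distances has a closed-form solution. The slope is \(b = S_{xy}/S_{xx}\) and the intercept is \(a = \bar{y} - b\bar{x}\). Here is a minimal standard-library sketch; the function name `simple_ols` is illustrative.

```python
def simple_ols(x, y):
    """Least-squares intercept a and slope b for y = a + b*x.

    Minimizes the sum of squared vertical distances sum((y_i - a - b*x_i)^2).
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))  # cross-product sum
    s_xx = sum((xi - x_bar) ** 2 for xi in x)                        # spread of x
    b = s_xy / s_xx
    a = y_bar - b * x_bar
    return a, b


# Points lying exactly on y = 2 + 3x.
a, b = simple_ols([0, 1, 2, 3], [2, 5, 8, 11])
print(a, b)  # 2.0 3.0
```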

Simple linear regression is the most straightforward case, having a single scalar predictor variable \(x\) and a single scalar response variable \(y\). More generally, linear regression is a linear approach for modelling the relationship between a scalar response and one or more explanatory variables (also known as dependent and independent variables). The case of one explanatory variable is called simple linear regression; for more than one, the process is called multiple linear regression. Multiple linear regression is therefore used to predict an outcome variable (\(y\)) on the basis of multiple distinct predictor variables (\(x\)). An example is predicting the CO2 emission of a car from its engine size and number of cylinders.

Testing individual predictors needs care. Suppose we apply two separate tests for two predictors, say \(x_1\) and \(x_2\), and both tests have high p-values. Each test only asks whether that predictor is needed when all the other predictors, including the other one, are already in the model. This does not imply that \(x_1\) and \(x_2\) can both be dropped together. Especially when the two are correlated, at least one of them may well be needed, and a joint test of both coefficients can check this.
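
To check whether \(x_1\) and \(x_2\) can be dropped together, one standard option is the partial (extra-sum-of-squares) F test. It compares the residual sum of squares (SSE) of the full model with that of the reduced model without those predictors:

\[ F = \frac{(\mathrm{SSE}_{\text{reduced}} - \mathrm{SSE}_{\text{full}})/q}{\mathrm{SSE}_{\text{full}}/(n - p)}, \]

where \(q\) is the number of coefficients tested and \(p\) is the number of parameters in the full model. The sketch below computes only the statistic. The SSE values in the usage line are made up for illustration. In practice you would compare the result with an \(F(q, n - p)\) critical value or obtain a p-value from statistical software.

```python
def partial_f(sse_reduced, sse_full, q, n, p_full):
    """Partial F statistic for testing q coefficients jointly equal to zero.

    sse_reduced: residual sum of squares of the model without the q predictors
    sse_full:    residual sum of squares of the model with all predictors
    q:           number of coefficients tested together (2 for x1 and x2)
    n:           number of observations
    p_full:      parameters in the full model, including the intercept
    """
    df_full = n - p_full  # residual degrees of freedom of the full model
    return ((sse_reduced - sse_full) / q) / (sse_full / df_full)


# Made-up SSE values: 24 observations, full model = intercept + 3 predictors.
print(round(partial_f(50.0, 30.0, q=2, n=24, p_full=4), 3))  # 6.667
```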

In a multiple linear regression we have several input features, such as f1, f2, f3 and f4, and an output feature f5. In a housing dataset, for instance, f2 could be the number of bedrooms in the house. The equation for simple linear regression is \(y = a + bx\). With three predictor variables, the prediction of \(y\) is expressed as \(y = b_0 + b_1 x_1 + b_2 x_2 + b_3 x_3\). The expansion to multiple and vector-valued predictor variables is known as multiple linear regression, one of the most important regression algorithms. It models the linear relationship between a single continuous dependent variable and more than one independent variable.

R provides comprehensive support for multiple linear regression, with the function `lm()` handling the regression analysis. In Python, multiple linear regression can be performed with both sklearn (scikit-learn) and statsmodels. Spark's `spark.ml` documentation includes examples that load a dataset in LibSVM format, split it into training and test sets, train on the training set, and then evaluate on the held-out test set. Minitab's documentation explains how to interpret the p-values of a multiple linear regression analysis.
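
Once the coefficients have been estimated, a prediction is just the regression equation evaluated at new inputs. This sketch evaluates \(y = b_0 + b_1 x_1 + \cdots + b_k x_k\) for any number of predictors. It uses a hypothetical `predict` helper and made-up coefficients.

```python
def predict(coefs, features):
    """Evaluate y = b0 + b1*x1 + ... + bk*xk for one observation.

    coefs:    [b0, b1, ..., bk], where b0 is the intercept
    features: [x1, ..., xk], one value per slope coefficient
    """
    if len(coefs) != len(features) + 1:
        raise ValueError("need exactly one coefficient per feature plus an intercept")
    return coefs[0] + sum(b * x for b, x in zip(coefs[1:], features))


# Hypothetical coefficients for three predictors x1, x2, x3.
print(predict([1.0, 2.0, 0.5, -1.0], [3.0, 4.0, 2.0]))  # 1 + 6 + 2 - 2 = 7.0
```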


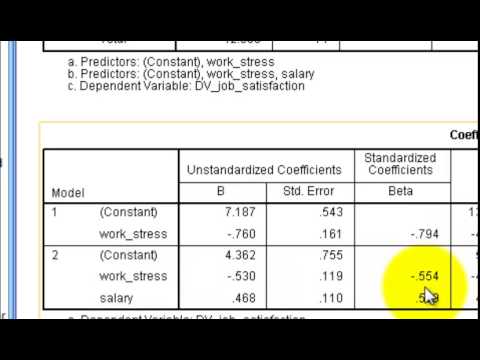

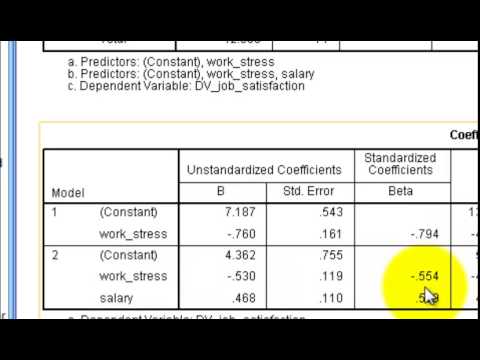

A multiple linear regression model shows the relationship between the dependent variable and multiple (two or more) independent variables. With two predictors it takes the form \(y = c_0 + c_1 x_1 + c_2 x_2\), where \(c_0\), \(c_1\) and \(c_2\) are the coefficients, which represent the regression weights. From the fitted model you can obtain both the overall variance explained (\(R^2\)) and the unique contribution (strength and direction) of each independent variable.
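
With exactly two predictors, the least-squares coefficients can be written in closed form using centered sums of squares and cross-products. The cross-product term \(S_{12}\) in the formulas shows how each weight depends on the correlation between \(x_1\) and \(x_2\). The function name `fit_two_predictors` and the toy data are illustrative. The data were generated from \(y = 1 + 2x_1 + 3x_2\) with no noise, so the fit should recover those weights.

```python
def fit_two_predictors(x1, x2, y):
    """Least-squares c0, c1, c2 for y = c0 + c1*x1 + c2*x2, via centered sums."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    d1 = [v - m1 for v in x1]  # centered predictors and response
    d2 = [v - m2 for v in x2]
    dy = [v - my for v in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))  # cross-product: links the two slopes
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    det = s11 * s22 - s12 ** 2
    if det == 0:
        raise ValueError("x1 and x2 are perfectly collinear")
    # Solve the 2x2 normal equations by Cramer's rule.
    c1 = (s22 * s1y - s12 * s2y) / det
    c2 = (s11 * s2y - s12 * s1y) / det
    c0 = my - c1 * m1 - c2 * m2
    return c0, c1, c2


# Toy data generated without noise from y = 1 + 2*x1 + 3*x2.
x1 = [0, 1, 2, 3, 4]
x2 = [1, 0, 2, 1, 3]
y = [4, 3, 11, 10, 18]
print([round(c, 6) for c in fit_two_predictors(x1, x2, y)])  # [1.0, 2.0, 3.0]
```

Each slope is the expected change in \(y\) for a one-unit change in that predictor with the other held fixed. That is the sense of each variable's unique contribution, and its sign gives the direction.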

Suppose you have 5 independent variables and 1 dependent variable, and the data do not meet the assumptions of multiple linear regression, perhaps because of many outliers. In that case you might look for a non-parametric substitute. Regression models describe the relationship between a dependent variable and one or more independent variables. In multiple regression, the output variable is a function of multiple input variables. In contrast to simple linear regression, multiple linear regression involves multiple predictors, so testing each variable can quickly become complicated. A low p-value (< 0.05) indicates that you can reject the null hypothesis that the coefficient is zero.

Two numbers are commonly used to assess how well a multiple linear regression model fits a dataset, and R-squared is one of them. R's model summary reports fit statistics in lines such as `Residual standard error: 0.9435 on 396 degrees of freedom` and `Multiple R-squared: 0.…`. R is a good choice for such examples because it is free, powerful, and widely available.

In regression analysis, curve fitting is the process of specifying the model that provides the best fit to the specific curves in your dataset. Curved relationships between variables are not as straightforward to fit and interpret as linear relationships.
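
R's `summary()` of an `lm()` fit reports a residual standard error, \(\sqrt{\mathrm{SSE}/(n - k - 1)}\). Each coefficient's p-value comes from its t statistic, the estimate divided by its standard error. The sketch below computes both using only the standard library. Python's standard library has no t distribution, so `approx_p_value` substitutes a normal approximation. That assumption is reasonable only with many residual degrees of freedom, such as 396. Both function names are hypothetical.

```python
import math


def residual_standard_error(residuals, k):
    """sqrt(SSE / (n - k - 1)) for a model with k predictors plus an intercept."""
    n = len(residuals)
    sse = sum(e * e for e in residuals)
    return math.sqrt(sse / (n - k - 1))


def approx_p_value(estimate, std_error):
    """Two-sided p-value for H0: coefficient = 0.

    Uses a normal approximation to the t distribution, so it is only
    reasonable when the residual degrees of freedom are large.
    """
    t = estimate / std_error
    return math.erfc(abs(t) / math.sqrt(2))  # equals 2 * (1 - Phi(|t|))


# Hypothetical coefficient estimate 0.8 with standard error 0.25 (t = 3.2).
p = approx_p_value(0.8, 0.25)
print(p < 0.05)  # True: reject H0 at the 5% level
```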

In R, the key to obtaining the required flexibility with regard to the number of explanatory variables lies in the formula `Manager ~ .`. It simply says to run a regression analysis on the `Manager` variable in the data frame `dataset`, using all remaining columns as explanatory variables.
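
R's formula `Manager ~ .` regresses one column on all the others. A rough standard-library Python analogue is sketched below. The hypothetical `fit_all_remaining` takes a dict of equal-length columns and builds a design matrix with an intercept column. It then solves the normal equations \((X^\top X)b = X^\top y\) by Gaussian elimination. This is for illustration only. R's `lm()` uses a QR decomposition, which is numerically more stable, and the `1e-12` singularity threshold here is an arbitrary assumption.

```python
def fit_all_remaining(table, response):
    """Least-squares fit of `response` on every other column, with an intercept.

    `table` maps column names to equal-length lists of numbers.
    Returns {"(Intercept)": b0, column_name: coefficient, ...}.
    """
    predictors = [name for name in table if name != response]
    y = table[response]
    n = len(y)
    # Design matrix: a column of ones followed by each predictor column.
    X = [[1.0] + [float(table[name][i]) for name in predictors] for i in range(n)]
    p = len(X[0])
    # Normal equations (X^T X) b = X^T y, stored as an augmented matrix.
    A = [
        [sum(X[r][i] * X[r][j] for r in range(n)) for j in range(p)]
        + [sum(X[r][i] * y[r] for r in range(n))]
        for i in range(p)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("predictor columns are linearly dependent")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, p):
            factor = A[r][col] / A[col][col]
            for c in range(col, p + 1):
                A[r][c] -= factor * A[col][c]
    # Back substitution.
    b = [0.0] * p
    for i in range(p - 1, -1, -1):
        b[i] = (A[i][p] - sum(A[i][j] * b[j] for j in range(i + 1, p))) / A[i][i]
    return dict(zip(["(Intercept)"] + predictors, b))


# Hypothetical toy data generated from Manager = 1 + 2*a + 3*b.
data = {
    "Manager": [4, 3, 11, 10, 18],
    "a": [0, 1, 2, 3, 4],
    "b": [1, 0, 2, 1, 3],
}
coefs = fit_all_remaining(data, "Manager")
print({name: round(v, 6) for name, v in coefs.items()})
# {'(Intercept)': 1.0, 'a': 2.0, 'b': 3.0}
```

Adding a column such as a squared term (for example `data["a_sq"] = [v * v for v in data["a"]]`) lets the same linear machinery fit a curved relationship. The model stays linear in its coefficients.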
