So, the decision will always be yes if wind is weak and outlook is rain. $\text{Gain}(\text{Decision}, \text{Wind}) = 0.940 - \left[\frac{8}{14} \cdot 0.811\right] - \left[\frac{6}{14} \cdot 1\right] = 0.048$. You can find the dataset and more information about the variables in the dataset on Analytics Vidhya. If you wonder how to compute this equation, please read this post. The root node feature is selected based on the results from the Attribute Selection Measure (ASM). $\text{Entropy}(\text{Decision}|\text{Wind}=\text{Strong}) = -\frac{3}{6}\log_2\frac{3}{6} - \frac{3}{6}\log_2\frac{3}{6} = 1$. This process is known as attribute selection.
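The Wind calculation can be checked with a few lines of Python. This is a minimal sketch, not the original author's code: the helper name `entropy` is made up for illustration, and the class counts are the ones quoted in the text (9 yes and 5 no overall, 6 yes and 2 no for weak wind, 3 yes and 3 no for strong wind).

```python
from math import log2

def entropy(counts):
    """Shannon entropy in bits for a list of class counts (hypothetical helper)."""
    total = sum(counts)
    # Empty classes are skipped, since 0 * log2(0) is treated as 0.
    return -sum((c / total) * log2(c / total) for c in counts if c > 0)

# Class counts as [yes, no], taken from the figures quoted in the text.
decision = [9, 5]      # all 14 days
wind_weak = [6, 2]     # 8 days with weak wind
wind_strong = [3, 3]   # 6 days with strong wind

total = sum(decision)
# Weighted average of the entropies of the two Wind subsets.
weighted = sum(sum(part) / total * entropy(part) for part in (wind_weak, wind_strong))
gain_wind = entropy(decision) - weighted

print(round(entropy(decision), 3))   # entropy of the whole decision column
print(round(entropy(wind_weak), 3))  # entropy for weak wind
print(round(gain_wind, 3))           # information gain of Wind
```

The three printed values should correspond to the 0.940, 0.811 and 0.048 figures used in the text.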

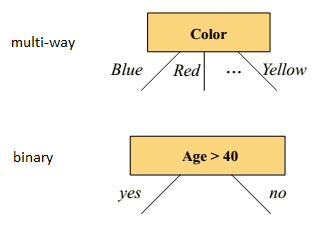

This algorithm uses either information gain or gain ratio to decide upon the classifying attribute. The decision tree algorithm falls under the category of supervised learning. Do you want to know about decision trees in machine learning? Here, we have 3 features and 2 output classes. We build a decision tree using information gain. As the name suggests, a decision tree is a tree-shaped structure that determines a course of action. Iterative Dichotomiser 3 (ID3): this algorithm uses information gain to decide which attribute is used to classify the current subset of the data. The ASM is applied repeatedly until a leaf (terminal) node is reached that cannot be split into sub-nodes. The above problem statement is taken from an Analytics Vidhya hackathon. So, I have calculated the information gain of the Competition and Type attributes. Start with all training instances associated with the root node, and use information gain to choose which attribute to label each node with. We would reflect it to the formula. That's why Yes leads to the Down leaf node and No leads to the Up leaf node. $\text{Entropy} = -\left[\frac{P}{P+N}\log_2\frac{P}{P+N} + \frac{N}{P+N}\log_2\frac{N}{P+N}\right]$. In this way, the most dominant attribute can be found. From the above images we can see that the information gain is maximum when we split on feature Y. The extended version of ID3 is C4.5. Here, there are 5 instances for sunny outlook. An attribute with a lower Gini index should be preferred. The feature matrix X holds the values or attributes by which we predict or categorize. $p_i$ = probability of an object being classified into a particular class. We will split the numerical feature where it offers the highest information gain. You can find the Python code of this algorithm in my GitHub repo. We've already got the decision of yes or no.
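To make the "lower Gini index is preferred" rule concrete, here is a small sketch using the standard Gini impurity definition, $1 - \sum_i p_i^2$. The function names `gini` and `weighted_gini` are made up for illustration, and the counts are the Wind counts quoted elsewhere in the text, so treat this as an assumption-laden example rather than the article's own code.

```python
def gini(counts):
    """Gini impurity 1 - sum(p_i^2) for a list of class counts (hypothetical helper)."""
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)

def weighted_gini(partitions):
    """Size-weighted Gini impurity of the subsets produced by a split."""
    total = sum(sum(p) for p in partitions)
    return sum(sum(p) / total * gini(p) for p in partitions)

# A perfectly mixed node is as impure as a two-class node can be.
print(gini([5, 5]))
# A pure node has zero impurity.
print(gini([10, 0]))
# Splitting 14 days on Wind: weak = [6 yes, 2 no], strong = [3 yes, 3 no].
print(round(weighted_gini([[6, 2], [3, 3]]), 3))
```

When comparing candidate attributes, the one whose split gives the smallest weighted Gini value would be chosen.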

We use them to categorize the profit. In that dataset, we have to choose one variable as the target variable. The decision is divided into two equal parts. Now, we calculate the entropy of the rest of the attributes: Age, Competition, and Type. Sklearn supports the Gini criterion for the Gini index, and it uses `gini` by default. But still, if you have any doubt, feel free to ask me in the comment section. Please visit Sanjeev's article regarding training, development, test, and splitting of the data for detailed reasoning. Example: Let's consider the dataset in the image below and draw a decision tree using the Gini index. We store the file's values in the `dataset` variable. First of all, dichotomisation means dividing into two completely opposite things. Decision Trees Explained With a Practical Example was originally published in Towards AI, Multidisciplinary Science Journal, on Medium. Anyone who keeps learning stays young. I hope you can see what I mean. Another thing is that it cannot predict if one of the columns is a number. We have an equal proportion for both the classes. In the Gini index, we have to choose some random values to categorize each attribute. These formulas might look confusing.
Here, we are calling the pandas library, therefore we write `pd.read_csv`. Are you an ML beginner confused about where to start? Then read my blog, How do I learn Machine Learning? The Competition attribute has two values: Yes and No. A decision tree is supervised because you have a labeled dataset. $\text{Entropy}(\text{Decision}) = -\frac{9}{14}\log_2\frac{9}{14} - \frac{5}{14}\log_2\frac{5}{14} = 0.940$. This package supports the most common decision tree algorithms such as ID3, C4.5, CART, CHAID or regression trees, also some bagging methods such as random forest and some boosting methods such as gradient boosting and AdaBoost. In this post, we have covered one of the most common decision tree algorithms, named ID3. Calculations for the wind column are over. We can represent any boolean function on discrete attributes using a decision tree. Even though decision tree algorithms are powerful, they have long training times. I took a classification problem because we can visualize the decision tree after training, which is not possible with regression models. The Wind attribute has two labels: weak and strong. Suppose we have to classify different types of fruits based on their features with the help of a decision tree. That's why decision trees are used in two places. Entropy is the main concept of this algorithm. The measure that helps determine which feature or attribute gives the maximum information about a class is called information gain. If yes, then this blog is just for you. So, here we have to classify the fruit type into different classes. We need to calculate the entropy first.

A small variation in the input data can result in a different decision tree. Thank you so much, it worked on 3.7, but I tried my own dataset in which one of the columns is numeric. Anyone who stops learning is old, whether at twenty or eighty. There are 8 instances for weak wind. Records are distributed recursively on the basis of attribute values. The values we pass in the brackets are the column values of each attribute. That means Mid belongs to different sets of attributes. The trick here is that we will convert continuous features into categorical ones. Now, we have calculated the entropy of all 3 values of the Age attribute. This blog post explains the ID3 algorithm in depth, and we will solve a problem step by step. The decision tree only took the first value of the first row and split that attribute on that value. Instead, I have to take the average of that column and split on the average. Is there any way to do that? That is a decent score for this type of problem statement. You can find the Python implementation of the ID3 algorithm here. A step-by-step approach to solving the decision tree example. With the help of a labeled dataset, the decision tree classifies the data. A decision tree allows us to take decisions based on the attributes. Here, X is the matrix of features. On the other hand, the decision will always be yes if humidity is normal.
We can summarize the ID3 algorithm as illustrated below: $\text{Gain}(S, A) = \text{Entropy}(S) - \sum \left[ p(S|A) \cdot \text{Entropy}(S|A) \right]$. In this dataset, there are four attributes. The feature or attribute with the highest information gain is used as the root for the splitting. We use statistical methods for ordering attributes as the root or internal nodes.
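The idea of converting a continuous feature into a categorical one by splitting where the information gain is highest can be sketched as follows. This is an illustrative assumption, not the implementation used by any library mentioned in the text: the function `best_threshold` and the toy `values` and `labels` lists are hypothetical, and candidate thresholds are taken as midpoints between consecutive distinct values.

```python
from math import log2

def entropy(labels):
    """Shannon entropy in bits of a list of class labels."""
    n = len(labels)
    return -sum((labels.count(v) / n) * log2(labels.count(v) / n) for v in set(labels))

def best_threshold(values, labels):
    """Return (threshold, gain) of the binary split with the highest information gain."""
    base = entropy(labels)
    best = (None, 0.0)
    points = sorted(set(values))
    # Candidate thresholds: midpoints between consecutive distinct values.
    for lo, hi in zip(points, points[1:]):
        t = (lo + hi) / 2
        left = [l for v, l in zip(values, labels) if v <= t]
        right = [l for v, l in zip(values, labels) if v > t]
        remainder = (len(left) * entropy(left) + len(right) * entropy(right)) / len(values)
        if base - remainder > best[1]:
            best = (t, base - remainder)
    return best

# Hypothetical toy data: a numeric column and a yes/no decision.
values = [60, 65, 70, 75, 80, 85, 90, 95]
labels = ["yes", "yes", "yes", "yes", "no", "no", "no", "no"]

threshold, gain = best_threshold(values, labels)
# Replace each number by a nominal label so that ID3 can use the column.
nominal = ["<=%s" % threshold if v <= threshold else ">%s" % threshold for v in values]
print(threshold, gain)
print(nominal)
```

Splitting on the column average, as asked in the reader comment, would be a simpler alternative: replace the search loop with a single threshold equal to the mean of `values`.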

Now, we need to calculate Entropy(Decision|Wind=Weak) and Entropy(Decision|Wind=Strong) respectively. $\text{Entropy}(\text{Decision}|\text{Wind}=\text{Strong}) = -p(\text{No}) \cdot \log_2 p(\text{No}) - p(\text{Yes}) \cdot \log_2 p(\text{Yes})$. After performing this step, you will get your results. Now, we have to find the entropy of this target variable.

You might have heard the term CART. That's why I am directly taking the value of the information gain of Competition and Type. So we don't need to further split the dataset. Now, we have finally calculated the information gain of the Age attribute. Well, it looks like we got the calculations right! $\text{Gain}(\text{Decision}, \text{Wind}) = \text{Entropy}(\text{Decision}) - \sum \left[ p(\text{Decision}|\text{Wind}) \cdot \text{Entropy}(\text{Decision}|\text{Wind}) \right]$. Now, it's time to see how we can implement a decision tree in Python. They are actually not different from the decision tree algorithm mentioned in this blog post. Now we know the information gain of all three attributes. And it can cause some issues in your machine learning model. So, let's calculate the entropy for the target variable (Profit). If the values are continuous, then they are discretized prior to building the model. In the dataset above there are 5 attributes, of which attribute E is the target, which contains 2 classes (Positive and Negative). Here, P is the number of Down in Profit, and N is the number of Up in Profit. Finally, it means that we need to check the humidity to decide if outlook is sunny. I will do my best to clear your doubt. It is a direct improvement on the ID3 algorithm, as it can handle both continuous and missing attribute values. And Y, the dependent variable, is nothing but the predicted or categorized value.
That's why the algorithm iteratively divides attributes into two groups, the most dominant attribute and the others, to construct a tree. Without the Type attribute, we come to the leaf node. Here, we get the entropy of our target variable as 1. Are you wondering how I filled in this table? Both gradient boosting and AdaBoost are boosting techniques for decision-tree-based machine learning models. They can use nominal attributes, whereas most common machine learning algorithms cannot. $\text{Entropy}(\text{Decision}|\text{Wind}=\text{Weak}) = -\frac{2}{8}\log_2\frac{2}{8} - \frac{6}{8}\log_2\frac{6}{8} = 0.811$. It stands for Classification and Regression Trees. You can see it here: https://github.com/serengil/chefboost. Decision trees are non-linear (piecewise, actually) algorithms. Now we can see that while splitting the dataset by feature Y, the child contains a pure subset of the target variable. Instead of (Outlook=Sunny|Temperature) gain, shouldn't it be Gain(Outlook=Sunny|Temperature)? And that is our goal. There are 9 decisions labeled yes, and 5 decisions labeled no. So in that dataset, choose Profit as the target variable. Still, we are able to build trees with continuous and numerical features. Decision rules will be found based on the entropy and information gain of the features. They require running core decision tree algorithms. Below are some assumptions that we made while using a decision tree: As you can see from the above image, a decision tree works on the sum of products form, which is also known as disjunctive normal form.
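We will take each of the features and calculate the information for each feature. Similarly, Down came 2 times in Mid, and Up came 2 times in Mid. $\text{Gain}(\text{Decision}, \text{Wind}) = \text{Entropy}(\text{Decision}) - \left[ p(\text{Decision}|\text{Wind}=\text{Weak}) \cdot \text{Entropy}(\text{Decision}|\text{Wind}=\text{Weak}) \right] - \left[ p(\text{Decision}|\text{Wind}=\text{Strong}) \cdot \text{Entropy}(\text{Decision}|\text{Wind}=\text{Strong}) \right]$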
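As a worked sketch of the Age calculation: the text gives 5 Down and 5 Up overall, 3 Down under Old, and 2 Down and 2 Up under Mid. Assuming (this is not stated explicitly in the text) that Old has no Up rows, so that New has 3 Up rows and no Down rows, the numbers would work out as follows.

$$
\begin{aligned}
\text{Entropy}(\text{Profit}) &= -\left[\tfrac{5}{10}\log_2\tfrac{5}{10} + \tfrac{5}{10}\log_2\tfrac{5}{10}\right] = 1 \\
\text{Entropy}(\text{Profit}, \text{Age}) &= \tfrac{3}{10}\cdot 0 + \tfrac{4}{10}\cdot 1 + \tfrac{3}{10}\cdot 0 = 0.4 \\
\text{Information Gain}(\text{Profit}, \text{Age}) &= 1 - 0.4 = 0.6
\end{aligned}
$$

Under that assumption, Age would have a larger gain than the 0.124 quoted for Competition, which would make Age the natural root node.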

Attribute subset selection is a technique used in the data mining process for data reduction. The root node is the top node of a tree, from where the tree starts. It supports numerical features and uses gain ratio instead of information gain. Nice explanation. Just one doubt: can you tell me how ID3 handles continuous values of attributes? I am aware that C4.5 is the way to go if there are continuous values, but I happened to find an ID3 problem that had continuous values in an attribute. If you are wondering about machine learning, read this blog: What is Machine Learning? What's more, the decision will always be no if wind is strong and outlook is rain. We perform feature scaling because the variables are not all on the same scale. In this column, we have graduate and not graduate values. Decision tree examples include the financial decision tree, the decision-based decision tree, etc. Notice that if the number of instances of a class were 0 and the total number of instances were n, then we need to calculate $-\frac{0}{n} \cdot \log_2\frac{0}{n}$.

This is a special case that often appears in decision tree applications. On the other hand, they tend to overfit. Here I will discuss what a decision tree in machine learning is, with a worked example and solution. $p$ denotes the probability, and $E(S)$ denotes the entropy. Besides, its evolved version C4.5 exists, which can handle numeric data. Last updated on February 23, 2021 by Editorial Team. The values are: Information Gain(Profit, Competition) = 0.124. After that, commons is a directory in chefboost. Now, humidity is the decision because it produces the highest score if outlook is sunny. Nowadays, gradient boosting decision trees are very popular in the machine learning community.
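The special case of an empty class can be settled with a limit. A short derivation, using L'Hôpital's rule on the standard form $x \log_2 x$ with $x = 0/n$ approached from the right, shows why the term is treated as zero:

$$
\lim_{x \to 0^+} x \log_2 x
= \lim_{x \to 0^+} \frac{\log_2 x}{1/x}
= \lim_{x \to 0^+} \frac{1/(x \ln 2)}{-1/x^2}
= \lim_{x \to 0^+} \frac{-x}{\ln 2}
= 0
$$

In code, the same convention is usually applied by simply skipping classes with zero instances when summing the entropy terms, because evaluating $\log_2 0$ directly would raise an error or return negative infinity.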

Here, there are 6 instances for strong wind. However, it is required to transform numeric attributes to nominal in ID3. data-set with all females in it, and then: in this split, the whole data-set with Married status yes; in this split, the whole data-set with Married status no. So, how do we calculate the information gain? They also build many decision trees in the background. Firstly, it was introduced in 1986, and the name is an acronym of Iterative Dichotomiser. And here we go! They all look for the feature offering the highest information gain. Down came 3 times in Old, that's why I have written 3. After calculating the entropy of each attribute, find the. The Gini index is a metric that measures how often a randomly chosen element would be incorrectly identified. Information gain: when we use a node in a decision tree to partition the training instances into smaller subsets, the entropy changes. Decision trees are simple to understand. Of course, I would be happy if you share this content in a classroom. The higher the entropy, the more the information content. Definition: Suppose $S$ is a set of instances, $A$ is an attribute, $S_v$ is the subset of $S$ with $A = v$, and $\text{Values}(A)$ is the set of all possible values of $A$; then $\text{Gain}(S, A) = \text{Entropy}(S) - \sum_{v \in \text{Values}(A)} \frac{|S_v|}{|S|} \cdot \text{Entropy}(S_v)$. Example: building a decision tree using information gain. Now, let's draw a decision tree for the following data using information gain. So, in that case, the Competition attribute has a higher information gain compared to the Type attribute. Now we have to choose our root node.
This algorithm can be used for regression and classification problems, yet it is mostly used for classification problems. p = number of positive cases (Loan_Status accepted), n = number of negative cases (Loan_Status not accepted). There are two types in this: male (1) and female (0). According to the main dataset table, all the Yes values belong to Down, and the No values belong to Up. Here, our target variable is Fruit Type. This can be reduced by using feature engineering techniques. The input values are preferred to be categorical. That's why we didn't take the Type attribute. The measure of the degree of probability of a particular variable being wrongly classified when it is randomly chosen is called the Gini index or Gini impurity. Now, you may ask: what are information gain and entropy? At this point, the decision will always be no if humidity is high. Besides, regular decision tree algorithms are designed to create branches for categorical features. The target variable is nothing but the final result. $\text{Entropy} = -\left[\frac{P}{P+N}\log_2\frac{P}{P+N} + \frac{N}{P+N}\log_2\frac{N}{P+N}\right] = -\left[\frac{5}{10}\log_2\frac{5}{10} + \frac{5}{10}\log_2\frac{5}{10}\right] = 1$. Now we calculate the information gain of the Age attribute. I hope you understood. We have two popular attribute selection measures. For instance, the following table lists the decision-making factors for playing tennis outside over the previous 14 days. C4.5: this algorithm is the successor of the ID3 algorithm. The formula for the calculation of the Gini index is $\text{Gini} = 1 - \sum_i p_i^2$. So, we will discuss how they are similar and how they are different in the following video. So, decision tree construction is over. Even though compilers cannot compute this operation, we can compute it with calculus. Now we have to check which attribute has the highest information gain.
The formula for calculating information gain is: Information Gain(Target Variable, Attribute) = Entropy(Target Variable) - Entropy(Target Variable, Attribute). So, calculate the information gain of the Age attribute: Information Gain(Profit, Age) = Entropy(Profit) - Entropy(Profit, Age). That's why we choose Competition as the next node. ID3 is one of the most common decision tree algorithms. The final tree for the above dataset would look like this. Here, you can find a tutorial that explains it in depth. Here, wind produces the highest score if outlook is rain. Gain(Outlook=Sunny|Temperature) = 0.570. Both random forest and gradient boosting are approaches rather than core decision tree algorithms themselves. Recursively construct each subtree on the subset of training instances that would be classified down that path in the tree. How do decision trees work in machine learning?
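The recursive construction described above can be sketched in plain Python. This is a minimal illustration, not the chefboost implementation: the function names are made up, and the 14 rows are assumed to be the classic play-tennis table, which matches the counts quoted in the text (9 yes, 5 no, 8 weak-wind days, 5 sunny days).

```python
from collections import Counter
from math import log2

COLUMNS = ["Outlook", "Temp", "Humidity", "Wind", "Decision"]
# Assumed dataset: the classic 14-day play-tennis table.
ROWS = [
    ("Sunny", "Hot", "High", "Weak", "No"), ("Sunny", "Hot", "High", "Strong", "No"),
    ("Overcast", "Hot", "High", "Weak", "Yes"), ("Rain", "Mild", "High", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Weak", "Yes"), ("Rain", "Cool", "Normal", "Strong", "No"),
    ("Overcast", "Cool", "Normal", "Strong", "Yes"), ("Sunny", "Mild", "High", "Weak", "No"),
    ("Sunny", "Cool", "Normal", "Weak", "Yes"), ("Rain", "Mild", "Normal", "Weak", "Yes"),
    ("Sunny", "Mild", "Normal", "Strong", "Yes"), ("Overcast", "Mild", "High", "Strong", "Yes"),
    ("Overcast", "Hot", "Normal", "Weak", "Yes"), ("Rain", "Mild", "High", "Strong", "No"),
]
DATA = [dict(zip(COLUMNS, row)) for row in ROWS]

def entropy(rows, target):
    """Entropy in bits of the target column over the given rows."""
    counts = Counter(r[target] for r in rows)
    return -sum(c / len(rows) * log2(c / len(rows)) for c in counts.values())

def gain(rows, attr, target):
    """Information gain obtained by splitting the rows on attr."""
    remainder = 0.0
    for value in set(r[attr] for r in rows):
        subset = [r for r in rows if r[attr] == value]
        remainder += len(subset) / len(rows) * entropy(subset, target)
    return entropy(rows, target) - remainder

def id3(rows, attrs, target="Decision"):
    """Build a nested-dict tree by always splitting on the highest-gain attribute."""
    labels = [r[target] for r in rows]
    if len(set(labels)) == 1:          # pure subset: make a leaf
        return labels[0]
    if not attrs:                      # nothing left to split on: majority vote
        return Counter(labels).most_common(1)[0][0]
    best = max(attrs, key=lambda a: gain(rows, a, target))
    rest = [a for a in attrs if a != best]
    return {best: {v: id3([r for r in rows if r[best] == v], rest, target)
                   for v in sorted(set(r[best] for r in rows))}}

tree = id3(DATA, ["Outlook", "Temp", "Humidity", "Wind"])
print(tree)
```

On this table the sketch should reproduce the rules stated in the text: Outlook at the root, Humidity under sunny, and Wind under rain.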

The decision would probably be no with probability 3/5 and yes with probability 2/5. You can support this work just by starring the GitHub repository. Then, it calculates the entropy and information gain of each attribute.
